How sounds can turn us on to the wonders of the universe

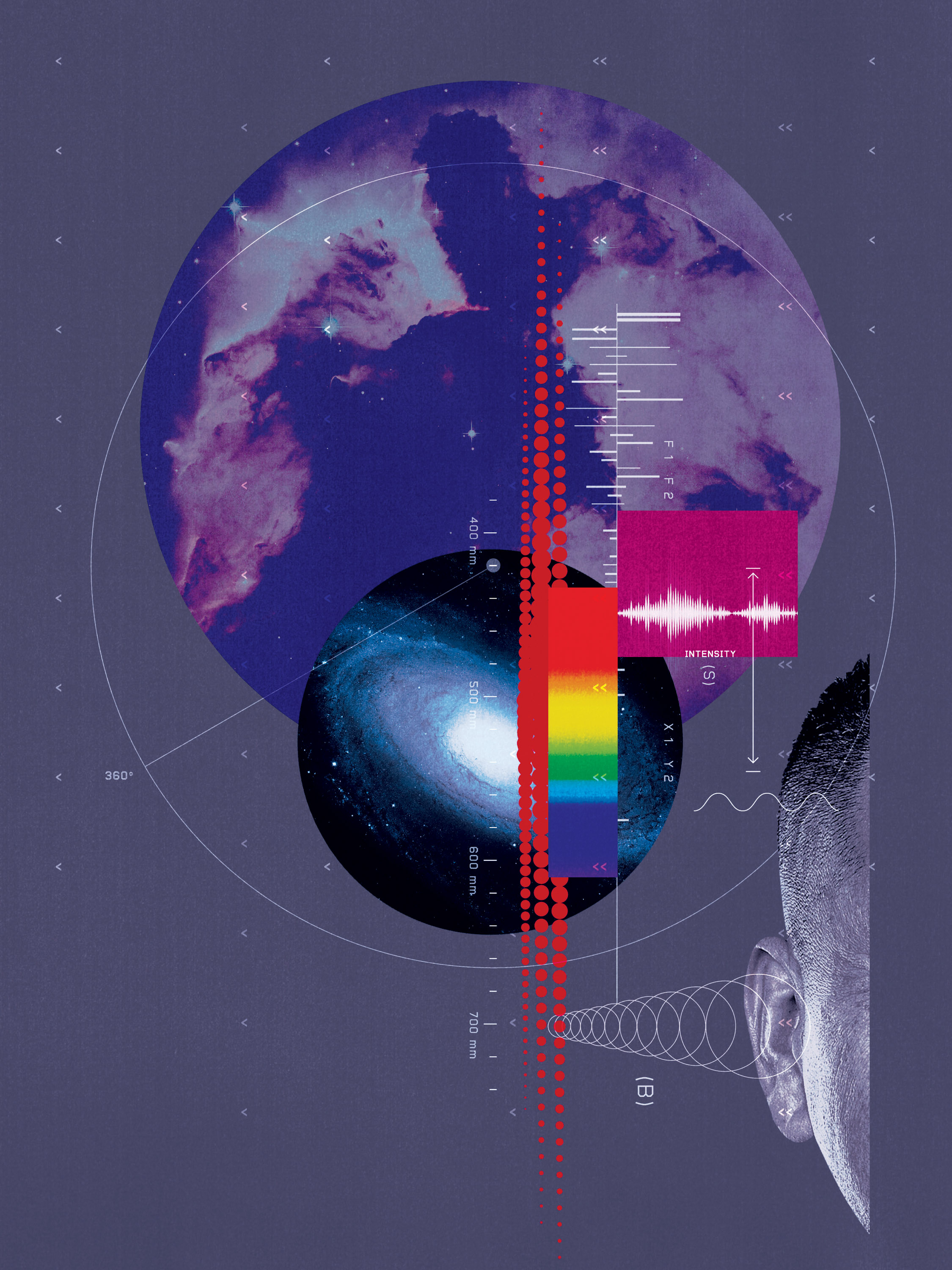

Astronomy is leading the way in making science more accessible through sonification—and the results sound amazing.

In the cavernous grand ballroom of the Seattle Convention Center, Sarah Kane stood in front of an oversize computer monitor, methodically reconstructing the life history of the Milky Way. Waving her shock of long white hair as she talked (“I’m easy to spot from a distance,” she joked), she outlined the “Hunt for Galactic Fossils,” an ambitious research project she’d recently led as an undergraduate at the University of Pennsylvania. By measuring the composition, temperature, and surface gravity of a huge number of stars, she’d been able to pick out 689 of them that don’t look like the others. Those celestial outliers apparently formed very early in the history of the universe, when conditions were much different from those today. Identifying the most ancient stars, Kane explained, will help us understand the evolution of our galaxy as a whole.

Kane’s presentation, which took place at the January 2023 meeting of the American Astronomical Society, unfolded smoothly, with just two small interruptions. Once she checked to make sure nobody was disturbing her guide dog. The other time, she asked one of the onlookers to help her highlight the correct chart on the computer screen, “since of course I can’t see the cursor.”

Astronomy should, in principle, be a welcoming field for a legally blind researcher like Kane. We are long past the era of observers huddling at the eyepiece of a giant telescope. Today, most astronomical studies begin as readings of light broken down by intensity and wavelength, digitized and sorted in whatever manner proves most useful. But astronomy’s accessibility potential remains largely theoretical; across the board, science is full of charts, graphs, databases, and images that are designed specifically to be seen. So Kane was thrilled three years ago when she encountered a technology known as sonification, designed to transform information into sound. Since then she’s been working with a project called Astronify, which presents astronomical information in audio form. “It is making data accessible that wouldn’t otherwise be,” Kane says. “I can listen to a sonification of a light curve and understand what’s going on.”

Sonification and data accessibility were recurring themes at the Seattle astronomy meeting. MIT astrophysicist Erin Kara played sonic representations of light echoing off hot gas around a black hole. Allyson Bieryla from the Harvard-Smithsonian Center for Astrophysics presented sonifications designed to make solar eclipses accessible to the blind and visually impaired (BVI) community. Christine Limb from Lincoln University described a proposal to incorporate sonification into astronomical data collected by the $600 million Rubin Observatory in Chile, scheduled to open in 2024. The meeting was just a microcosm of a bigger trend in science accessibility. “Astronomy is a leading field in sonification, but there’s no reason that work couldn’t be generalized,” Kane says.

Sure enough, similar sonification experiments are underway in chemistry, geology, and climate science. High schools and universities are exploring the potential of auditory data displays for teaching math. Other types of sonification could assist workers in hazardous and high-stress occupations, or make urban environments easier to navigate. For much of the public, these innovations will be add-ons that could improve quality of life. But in the United States alone, an estimated 1 million people are blind and another 6 million are visually impaired. For these people, sonification could be transformative. It could open access to education, to once unimaginable careers, even to the secrets of the universe.

Visual depictions of statistical data have a deep history, going back at least to 1644, when the Dutch astronomer Michael Florent van Langren created a graph showing different estimates of the distance in longitude between Rome and Toledo, Spain. Over the centuries, mathematicians and scientists have developed graphical standards so familiar that nobody stops to think about how to interpret a trend line or a pie chart. Proper sonification of data, on the other hand, did not begin until the 20th century: the earliest meaningful example was the Geiger counter, perfected in the 1920s, its eerie clicks signifying the presence of dangerous ionizing radiation. More recently, doctors embraced sound to indicate specific medical readings; the beep-beep of an electrocardiogram is perhaps the most iconic (unless you count Monty Python’s medical device that goes bing!). Current applications of sonic display are still mostly specialized, limited in scope, or both. For instance, physicists and mathematicians occasionally use audio analysis, but mostly to express technical operations such as sorting algorithms. At the consumer end, many modern cars produce sounds to indicate the presence of another vehicle in the driver’s blind spot, but those sonifications are specific to one problem or situation.

Niklas Rönnberg, a sonification expert at Linköping University in Sweden, has spent years trying to figure out how to get sound-based data more widely accepted, both in the home and in the workplace. A major obstacle, he argues, is the continued lack of universal standards about the meaning of sounds. “People tend to say that sonification is not intuitive,” he laments. “Everyone understands a line graph, but with sound we are struggling to reach out.” Should large numbers be indicated by high-pitched tones or deep bass tones, for example? People like to choose personalized tones for something as simple as a wake-up alarm or a text-message notification; getting everyone to agree on the meaning of sounds linked to dense information such as, say, the weather forecast for the next 10 days is a tall order.

Bruce Walker, who runs the Sonification Lab at Georgia Tech, notes another barrier to acceptance: “The tools have not been suitable to the ecosystems.” Auditory display makes no sense in a crowded office or a loud factory, for instance. At school, sound-based education tools are unworkable if they require teachers to add speakers and sound cards to their computers, or to download proprietary software that may not be compatible or that might be wiped away by the next system update. Walker lays some of the blame at the feet of researchers like himself. “Academics are just not very good at tech transfer,” he says. “Often we have these fantastic projects, and they just sit on the shelf in somebody’s lab.”

Yet Walker thinks the time is ripe for sonification to catch on more widely. “Almost everything nowadays can make sound, so we’re entering a new era,” he says. “We might as well do so in a way that’s beneficial.”

Seizing that opportunity will require being thoughtful about where sonification is useful and where it is counterproductive. For instance, Walker opposes adding warning sounds to electric vehicles so they’re easier to hear coming. The challenge, he argues, is to make sure EVs are safe around pedestrians without adding more noise pollution: “The quietness of an electric car is a feature, not a defect.”

There is at least one well-proven path to getting the general public excited about data sonification. Decades before Astronify came along, some astronomers realized that sound is a powerful way to communicate the wonder of the cosmos to a wide audience.

Bill Kurth, a space physicist at the University of Iowa, was an early proponent of data sonification for space science. Starting in the 1970s, he worked on data collected by NASA’s Voyager probes as they flew past the outer planets of the solar system. Kurth studied results from the probes’ plasma instruments (which measured the solar wind crashing into planetary atmospheres and magnetic fields) and started translating the complex, abstract signals into sound to understand them better. He digitized a whole library of “whistlers,” which he recognized as radio signals from lightning discharges on Jupiter—the first evidence of lightning on another world.

In the late 1990s, Kurth began experimenting with ways to translate those sounds of space into versions that would make sense to a non-expert listener. The whistles and pops of distant planets caught the public imagination and became a staple of NASA press conferences.

Since then, NASA has increasingly embraced sonification to bring its publicly funded (and often expensive) cosmological discoveries to the masses. One of the leaders in that effort is Kimberly Arcand at the Harvard-Smithsonian Center for Astrophysics. For the past five years, she has worked with NASA to develop audio versions of results from the Chandra X-ray Observatory, a Hubble-like space telescope that highlights energetic celestial objects and events, such as cannibal stars and supernova explosions.

Arcand’s space sonifications operate on two levels. To trained astronomers, they express well-defined data about luminosity, density, and motion. To the lay public, they capture the dynamic complexity of space scenes that are hard to appreciate from visuals alone. Radio shows and television news picked up these space soundscapes, sharing them widely. More recently, the sonifications became staples on YouTube and Soundcloud; collectively, they’ve been heard at least hundreds of millions of times. Just this spring, Chandra’s greatest hits were released as a vinyl LP, complete with its own record-release party.

“The first time I heard our finished Galactic Center data sonification, I experienced that data in a completely different way. I was hearing clumps where the sounds were in harmony with each other. I was hearing solos from the various wavelengths of light,” Arcand says. Researchers in other fields are increasingly embracing her approach. For instance, Stanford researchers have converted 1,200 years of climate data into sound in order to help the public comprehend the magnitude and pace of global warming.

Arcand’s short, accessible astronomy sonifications have been great for outreach to the general public, but she worries that they’ve had little impact in making science more accessible to blind and visually impaired people. (“Before I started as an undergrad, I hadn’t even heard them,” Kane confesses.) To assess the broader usefulness of her work, Arcand recently conducted a study of how blind or visually impaired people and non-BVI people respond to data sonification. The still-incomplete results indicate similar levels of interest and engagement in both groups. She takes that as a sign that such sonifications have a lot of untapped potential for welcoming a more diverse population into the sciences.

The bigger challenge, though, is what comes next: pretty sounds, like pretty pictures, are not much help for people with low vision who are drawn in by the outreach but then want to go deeper and do research themselves. In principle, astronomy could be an exceptionally accessible field, because it relies so heavily on pure data. Studying the stars does not necessarily involve lab work or travel. Even so, only a handful of BVI astronomers have managed to break past the barriers. Enrique Pérez Montero, who studies galaxy formation and does community outreach at Spain’s Instituto de Astrofísica de Andalucía, is one of the few success stories. Nicolas Bonne at the University of Portsmouth in the UK is another; he now develops both sound-based and tactile techniques for sharing his astronomical work.

Wanda Díaz-Merced is probably the world’s best-known BVI astronomer. But her career illustrates the magnitude of the challenges. She gradually lost her eyesight in her adolescence and early adulthood. Though she initially wondered whether she would be able to continue her studies, she persisted, and in 2005 she got an internship at NASA’s Goddard Space Flight Center, where she ended up collaborating with the computer scientist Robert Candey to develop data-sonification tools. Since then, she has continued her work at NASA, the University of Glasgow, the Harvard-Smithsonian Center for Astrophysics, the European Gravitational Observatory, the Astroparticle and Cosmology Laboratory in Paris, and the Universidad del Sagrado Corazón in Puerto Rico. At every step, she’s had to make her own way. “I’ve found sonification useful for all the data sets I’ve been able to analyze, from the solar wind to cosmic rays, radio astronomy, and x-ray data, but the accessibility of the databases is really bad,” she says. “Proposals for mainstreaming sonification are never approved—at least not the ones I have written.”

Jenn Kotler, a user experience designer at the Space Telescope Science Institute (STScI), became obsessed with this problem after hearing a lecture by Garry Foran, a blind chemist who reinvented himself as an astronomer using early sonification tools. Kotler wondered if she could do better and, in collaboration with two colleagues, applied for a grant from STScI to develop a dedicated kit for converting astronomical data into sound. They were funded, and in 2020, just as the covid pandemic began, Kotler and company began building what became Astronify.

“Our goal with Astronify was to have a tool that allows people to write scripts, pull in the data they’re interested in, and sonify it according to their own parameters,” Kotler says. One of the simplest applications would be to translate data indicating the change in brightness of an object, such as when a planet passes in front of a distant star, with decreased brightness expressed as lower pitch. After hearing concerns about the lack of standards on what different types of sounds should indicate, Kotler worked with a panel of blind and visually impaired test users. “As soon as we started developing Astronify, we wanted them involved,” she says. It was the kind of community input that had mostly been lacking in earlier, outreach-oriented sonifications designed by sighted researchers and primarily aimed at sighted users.
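The brightness-to-pitch idea described above can be illustrated with a minimal sketch. This is not Astronify's code or API; the function name `flux_to_frequencies`, the 220 to 880 Hz pitch range, and the sample light curve are all hypothetical choices made for illustration. It assumes only that dimmer measurements should map to lower pitches.

```python
def flux_to_frequencies(flux, low_hz=220.0, high_hz=880.0):
    """Map each brightness sample to a pitch in hertz.

    Hypothetical helper, not part of Astronify: the dimmest sample gets
    low_hz, the brightest gets high_hz.
    """
    dimmest, brightest = min(flux), max(flux)
    if brightest == dimmest:
        # A perfectly flat light curve: use one mid-range pitch throughout.
        return [(low_hz * high_hz) ** 0.5] * len(flux)

    frequencies = []
    for value in flux:
        # 0.0 for the dimmest sample, 1.0 for the brightest.
        fraction = (value - dimmest) / (brightest - dimmest)
        # Interpolate on a logarithmic scale, since pitch is heard
        # in ratios (octaves) rather than in absolute hertz.
        frequencies.append(low_hz * (high_hz / low_hz) ** fraction)
    return frequencies


# Made-up light curve: a steady star that dims briefly,
# as it might when a planet passes in front of it.
light_curve = [1.00, 1.00, 0.99, 0.97, 0.97, 0.99, 1.00, 1.00]
pitches = flux_to_frequencies(light_curve)
```

Played in sequence, the resulting tones would hold steady, drop as the star dims, and rise again. Flipping the mapping so that dimmer means higher is a one-line change, which is exactly the kind of convention a panel of test users can help settle.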

Astronify is now a complete, freely available open-source package. So far its user base is tiny (fewer than 50 people, according to Kotler), but she sees Astronify as a crucial step toward much broader accessibility in science. “It’s still so early with sonification, and frankly not enough actual research is being done about how best to use it,” she says.

One of her goals is to expand her sonification effort to create auditory “thumbnails” of all the different types of data stored in the Mikulski Archive for Space Telescopes, a super-repository that includes results from the Hubble and James Webb space telescopes along with many other missions and data archives. Making that collection searchable via sound would greatly improve the accessibility of a leading data science repository, Kotler notes, and would establish a template for other fields to follow.

Kotler also shares ideas with like-minded researchers and data scientists (such as James Trayford at the University of Portsmouth, who has collaborated with Bonne on a sonification package called STRAUSS) through a three-year-old international organization called Sonification World Chat. Arcand participates as well, seeking ways to apply the intuitive nature of her cosmic outreach to the harder task of making research data accessible to the BVI community. She notes that sonification is especially useful for interpreting any measurement that changes over time—a type of data that exists in pretty much every research field. “Astronomy is the main chunk of folks in the chat, but there are people from geology, oceanography, and climate change too,” she says.

The broader goal of groups like Sonification World Chat is to tear down the walls between tools like Astronify, which are powerful but useful only to a specialized community, and general-purpose sonifications like spoken GPS on phones, which are beneficial to a wide variety of people but only in very limited ways.

Rönnberg focuses a lot of his attention on dual-use efforts where data sonification is broadly helpful in a specific setting or occupation but could have accessibility applications as a side effect. In one project, he has explored the potential of sonified data for air traffic control, collaborating with the Air Navigation Services of Sweden. His team experimented with sounds to indicate when an airplane is entering a certain controller’s sector, for instance, or to provide 360-degree awareness that is difficult to convey visually. Thinking about a more familiar transportation issue, Rönnberg is working on a test project for sonified buses that identify themselves and indicate their route as they pull in to a stop. Additional sonic displays could mark the locations of the different doors and indicate which ones are accessible, a feature useful to passengers whether they see well or not.

Dual use is also a guiding theme for Kyla McMullen, who runs the SoundPAD Lab at the University of Florida (the PAD stands for “perception, application, and development”). She is working with the Gainesville Fire Department to test a system that uses sound to help firefighters navigate through smoke-filled buildings. In that situation, everyone is visually impaired. Like Rönnberg, McMullen sees a huge opportunity for data sonification to make urban environments more accessible. Another of her projects builds on GPS, adding three-dimensional sounds—signals that seem to originate from a specific direction. The goal is to create sonic pointers to guide people intuitively through an unfamiliar location or neighborhood. “Mobility is a big area for progress—number one on my list,” she says.

Walker, who has been working on data sonification for more than three decades, is trying to make the most of changing technology. “What we’re seeing,” he says, “is we develop something that becomes more automated or easier to use, and then as a result, it makes it easier for people with disabilities.” He has worked with Bloomberg to display auditory financial data on the company’s terminals, and with NASA to create standards for a sonified workstation. Walker is also exploring ways to make everyday tech more accessible. For instance, he notes that the currently available screen readers for cell phones fail to capture many parts of the social media experience. So he is working with one of his students to generate sonified emojis “to convey the actual emotion behind a message.” Last year they tested the tool with 75 sighted and BVI subjects, who provided mostly positive feedback.

Education may be the most important missing link between general-purpose assistive sounds and academic-oriented sonification. Getting sound into education hasn’t been easy, Walker acknowledges, but he thinks the situation is getting better here, too. “We’re seeing many more online and web-based tools, like our Sonification Studio, that don’t require special installations or a lot of technical support. They’re more like ‘walk up and use,’” he says. “It’s coming.”

Sonification Studio generates audio versions of charts and graphs for teaching or for analysis. Other prototype education projects use sonification to help students understand protein structures and human anatomy. At the most recent virtual meeting of the Sonification World Chat, members also presented general-purpose tools for sonifying scientific data and mathematical formulas, and for teaching BVI kids basic skills in data interpretation. Phia Damsma, who oversees the World Chat’s learning group, runs an Australian educational software company that focuses on sonification for BVI students. The number of such efforts has increased sharply over the past decade: in a paper published recently in Nature, Anita Zanella at Italy’s Istituto Nazionale di Astrofisica and colleagues identified more than 100 sonification-based research and education projects in astronomy alone.

These latest applications of sonification are quickly getting real-world tests, aided by the proliferation of cloud-based software and ubiquitous sound-making computers, phones, and other devices. Díaz-Merced, who has struggled for decades to develop and share her own sonification tools, finally perceives signs of genuine progress for scientists from the BVI community. “There is still a lot of work to do,” she says. “But little by little, with scientific research on multisensorial perception that puts the person at the center, that work is beginning.”

Kane has used Astronify mainly as a tester, but she’s inspired to find that the sonified astronomical data it generates are also directly relevant to her galactic studies and formatted in a standard scientific software package, giving her a type of access that did not exist just three years ago. By the time she completes her PhD, she could be testing and conducting research with sonification tools that are built right into the primary research databases in her field. “It makes me feel hopeful that things have gotten so much better within my relatively short lifetime,” she says. “I’m really excited to see where things will go next.”

Corey S. Powell is a science writer, editor, and publisher based in Brooklyn, NY. He is the cofounder of OpenMind magazine.
