For 30 years, a single Japanese company called Ajinomoto has made billions producing a particular insulating film for computer chips. Competitors have struggled to outdo it, and today Ajinomoto holds more than 90% of the market for the product, which is used in everything from laptops to data centers.

But now, a startup based in Berkeley, California, is embarking on a herculean effort to dethrone Ajinomoto and bring this small slice of the chipmaking supply chain back to the US.

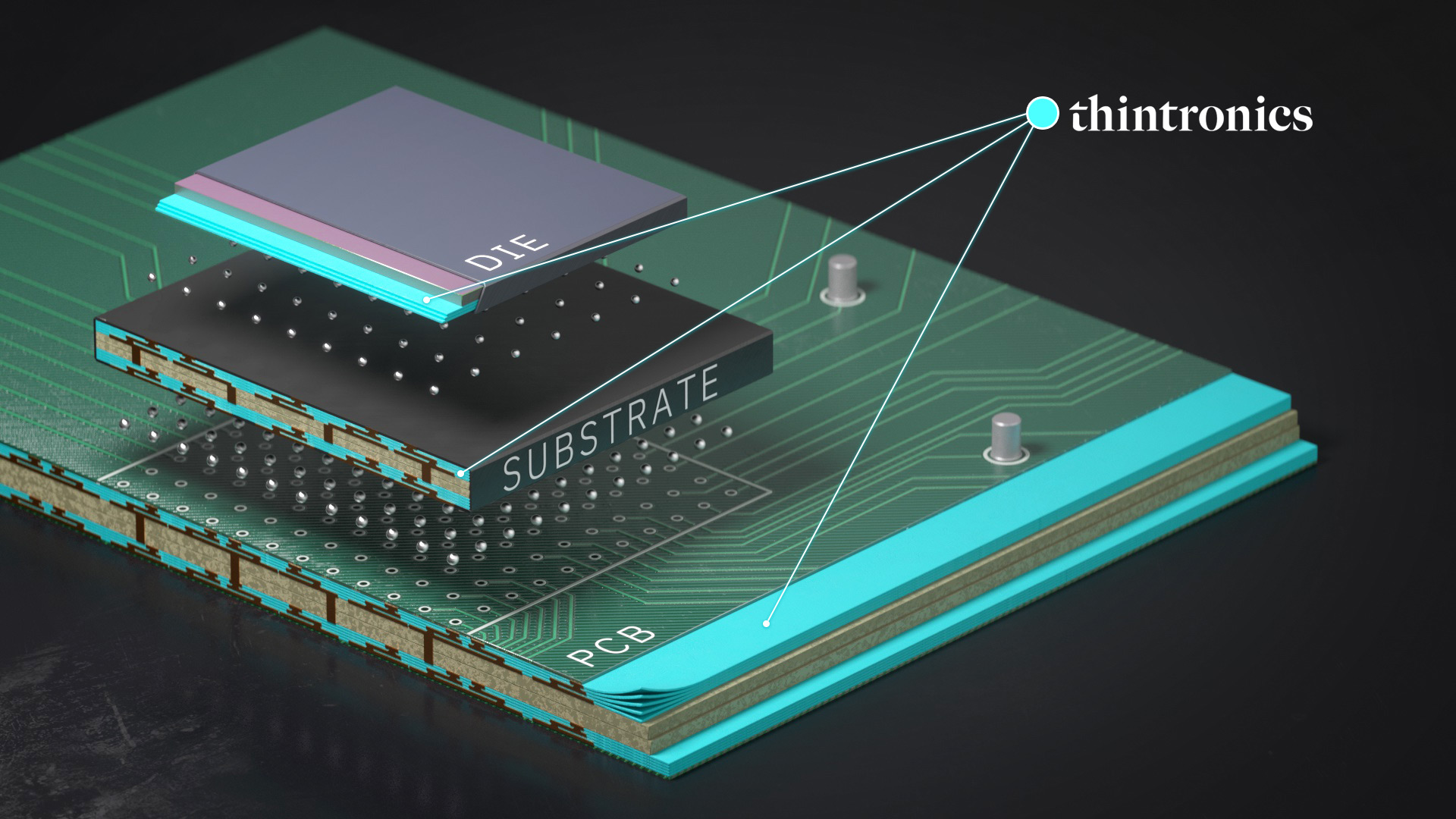

Thintronics is promising a product purpose-built for the computing demands of the AI era: a suite of new materials that the company claims have better insulating properties and, if adopted, could mean data centers with faster computing speeds and lower energy costs.

The company is at the forefront of a coming wave of new US-based companies, spurred by the $280 billion CHIPS and Science Act, that is seeking to carve out a portion of the semiconductor sector, which has become dominated by just a handful of international players. But to succeed, Thintronics and its peers will have to overcome a web of challenges—solving technical problems, disrupting long-standing industry relationships, and persuading global semiconductor titans to accommodate new suppliers.

“Inventing new materials platforms and getting them into the world is very difficult,” Thintronics founder and CEO Stefan Pastine says. It is “not for the faint of heart.”

The insulator bottleneck

If you recognize the name Ajinomoto, you’re probably surprised to hear it plays a critical role in the chip sector: the company is better known as the world’s leading supplier of MSG seasoning powder. In the 1990s, Ajinomoto discovered that a by-product of MSG made a great insulator, and it has enjoyed a near monopoly in the niche material ever since.

But Ajinomoto doesn’t make any of the other parts that go into chips. In fact, the insulating materials in chips rely on dispersed supply chains: one layer uses materials from Ajinomoto, another uses material from another company, and so on, with none of the layers optimized to work in tandem. The resulting system works okay when data is being transmitted over short paths, but over longer distances, like between chips, weak insulators act as a bottleneck, wasting energy and slowing down computing speeds. That has become a growing concern, especially as AI training grows more expensive and consumes eye-popping amounts of energy. (Ajinomoto did not respond to requests for comment.)

None of this made much sense to Pastine, a chemist who sold his previous company, which specialized in recycling hard plastics, to an industrial chemicals company in 2019. Around that time, he started to believe that the chemicals industry could be slow to innovate, and he thought the same pattern was keeping chipmakers from finding better insulating materials. In the chip industry, he says, insulators have “kind of been looked at as the redheaded stepchild”—they haven’t seen the progress made with transistors and other chip components.

He launched Thintronics that same year, with the hope that cracking the code on a better insulator could provide data centers with faster computing speeds at lower costs. That idea wasn’t groundbreaking—new insulators are constantly being researched and deployed—but Pastine believed that he could find the right chemistry to deliver a breakthrough.

Thintronics says it will manufacture different insulators for all layers of the chip, for a system designed to swap into existing manufacturing lines. Pastine tells me the materials are now being tested with a number of industry players. But he declined to provide names, citing nondisclosure agreements, and similarly would not share details of the formula.

Without more details, it’s hard to say exactly how well the Thintronics materials compare with competing products. The company recently tested its materials’ Dk values, which are a measure of how effective an insulator a material is. Venky Sundaram, a researcher who has founded multiple semiconductor startups but is not involved with Thintronics, reviewed the results. Some of Thintronics’ numbers were fairly average, he says, but their most impressive Dk value is far better than anything available today.
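Dk is the dielectric constant (relative permittivity) of an insulating material. In an idealized case where a signal trace is fully surrounded by a single lossless dielectric, the signal travels at roughly c/√Dk, so a lower Dk means a faster signal. The Python sketch below illustrates only that relationship under those assumptions; the 50 mm trace length and the Dk values are hypothetical and are not Thintronics' or Ajinomoto's figures.

```python
import math

# Speed of light in vacuum, expressed in millimetres per picosecond.
C_MM_PER_PS = 0.299792458


def propagation_delay_ps(length_mm: float, dk: float) -> float:
    """Time for a signal to cross a trace of the given length.

    Idealized model (assumption): the trace is fully embedded in a single,
    lossless dielectric, so the signal velocity is c / sqrt(Dk).
    """
    if dk < 1.0:
        raise ValueError("Dk (relative permittivity) cannot be below 1")
    velocity_mm_per_ps = C_MM_PER_PS / math.sqrt(dk)
    return length_mm / velocity_mm_per_ps


# Hypothetical Dk values for comparison; not measured data from the article.
for dk in (4.0, 3.0, 2.5):
    print(f"Dk={dk}: {propagation_delay_ps(50.0, dk):.1f} ps over a 50 mm trace")
```

Because delay scales with √Dk in this model, dropping Dk from 4.0 to 3.0 cuts the delay by about 13%, all else being equal.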

A rocky road ahead

Thintronics’ vision has already garnered some support. The company received a $20 million Series A funding round in March, led by venture capital firms Translink and Maverick, as well as a grant from the US National Science Foundation.

The company is also seeking funding from the CHIPS Act. Signed into law by President Joe Biden in 2022, it’s designed to boost companies like Thintronics in order to bring semiconductor manufacturing back to American companies and reduce reliance on foreign suppliers. A year after it became law, the administration said that more than 450 companies had submitted statements of interest to receive CHIPS funding for work across the sector.

The bulk of funding from the legislation is destined for large-scale manufacturing facilities, like those operated by Intel in New Mexico and Taiwan Semiconductor Manufacturing Company (TSMC) in Arizona. But US Secretary of Commerce Gina Raimondo has said she’d like to see smaller companies receive funding as well, especially in the materials space. In February, applications opened for a pool of $300 million earmarked specifically for materials innovation. While Thintronics declined to say how much funding it was seeking or from which programs, the company does see the CHIPS Act as a major tailwind.

But building a domestic supply chain for chips—a product that currently depends on dozens of companies around the globe—will mean reversing decades of specialization by different countries. And industry experts say it will be difficult to challenge today’s dominant insulator suppliers, who have often had to adapt to fend off new competition.

“Ajinomoto has been a 90-plus-percent-market-share material for more than two decades,” says Sundaram. “This is unheard-of in most businesses, and you can imagine they didn’t get there by not changing.”

One big challenge is that the dominant manufacturers have decades-long relationships with chip designers like Nvidia or Advanced Micro Devices, and with manufacturers like TSMC. Asking these players to swap out materials is a big deal.

“The semiconductor industry is very conservative,” says Larry Zhao, a semiconductor researcher who has worked in the dielectrics industry for more than 25 years. “They like to use the vendors they already know very well, where they know the quality.”

Another obstacle facing Thintronics is technical: insulating materials, like other chip components, are held to manufacturing standards so precise they are difficult to comprehend. The layers where Ajinomoto dominates are thinner than a human hair. The material must also be able to accept tiny holes, which house wires running vertically through the film. Every new iteration is a massive R&D effort in which incumbent companies have the upper hand given their years of experience, says Sundaram.

If all this is completed successfully in a lab, yet another hurdle lies ahead: the material has to retain those properties in a high-volume manufacturing facility, which is where Sundaram has seen past efforts fail.

“I have advised several material suppliers over the years that tried to break into [Ajinomoto’s] business and couldn’t succeed,” he says. “They all ended up having the problem of not being as easy to use in a high-volume production line.”

Despite all these challenges, one thing may be working in Thintronics’ favor: US-based tech giants like Microsoft and Meta are making headway in designing their own chips for the first time. The plan is to use these chips for in-house AI training as well as for the cloud computing capacity that they rent out to customers, both of which would reduce the industry’s reliance on Nvidia.

Though Microsoft, Google, and Meta declined to comment on whether they are pursuing advancements in materials like insulators, Sundaram says these firms could be more willing to work with new US startups rather than defaulting to the old ways of making chips: “They have a lot more of an open mind about supply chains than the existing big guys.”


In an executive individual contributor (IC) role as a technical leader, I have a deep impact by looking at the intersections of systems across organizational boundaries, prioritizing the problems that really need solving, and then assembling stakeholders from across teams to create the best solutions.

Driving influence through expertise

People leaders typically have the benefit of an organization that scales with them. As an IC, you scale through the scope, complexity, and impact of the problems you help solve. The key to being effective is getting really good at identifying and structuring problems. You need to proactively identify the most impactful problems to solve—the ones that deliver the most value but that others aren’t focusing on—and structure them in a way that makes them easier to solve.

People skills are still important because building strong relationships with colleagues is fundamental. When consensus is clear, solving problems is straightforward, but when the solution challenges the status quo, it’s crucial to have established technical credibility and organizational influence.

And then there’s the fun part: getting your hands dirty. Choosing the IC path has allowed me to spend more time designing and building AI/ML systems than a management role would: prototyping, experimenting with new tools and techniques, and thinking deeply about our most complex technical challenges.

A great example I’ve been fortunate to work on involved designing the structure of a new ML-driven platform. It required significant knowledge at the cutting edge and touched multiple other parts of the organization. The freedom to structure my time as an IC allowed me to dive deep into the domain, understand the technical needs of the problem space, and scope the approach. At the same time, I worked across multiple enterprise and line-of-business teams to align appropriate resources and define solutions that met the business needs of our partners. This allowed us to deliver a cutting-edge solution on a very short timescale to help the organization safely scale a new set of capabilities.

Being an IC lets you operate more like a surgeon than a general. You focus your efforts on precise, high-leverage interventions. Rapid, iterative problem-solving is what makes the role impactful and rewarding.

The keys to success as an IC executive

In an IC executive role, a few skills are essential. First is maintaining deep technical expertise. I usually have a couple of different lines of study going at any given time: one closely related to the problems I’m currently working on, and another that takes a long view on foundational knowledge that will help me in the future.

Second is the ability to proactively identify and structure high-impact problems. That means developing a strong intuition for where AI/ML can drive the most business value, and framing the problem in a way that achieves the best business results.

Determining how the problem will be formulated means considering what specific problem you are trying to solve and what you are leaving off the table. This intentional approach aligns the right complexity level to the problem to meet the organization’s needs with the minimum level of effort. The next step is breaking down the problem into chunks that can be solved by the people or teams aligned to the effort.

Doing this well requires building a diverse network across the organization. Building and nurturing relationships in different functional areas is crucial to IC success, giving you the context to spot impactful problems and the influence to mobilize resources to address them.

Finally, you have to be an effective communicator who can translate between technical and business audiences. Executives need you to contextualize system design choices in terms of business outcomes and trade-offs. And engineers need you to provide crisp problem statements and solution sketches.

It’s a unique mix of skills, but if you can cultivate that combination of technical depth, organizational savvy, and business-conscious communication, you can drive powerful innovations as an IC. And you can do it while preserving the hands-on problem-solving abilities that likely drew you to engineering in the first place.

Empowering IC career paths

As the fields of AI/ML evolve, there’s a growing need for senior ICs who can provide technical leadership. Many organizations are realizing that they need people who can combine deep expertise with strategic thinking to ensure these technologies are being applied effectively.

However, many companies are still figuring out how to empower and support IC career paths. I’m fortunate that Capital One has invested heavily in creating a strong Distinguished Engineer community. We have mentorship, training, and knowledge-sharing structures in place to help senior ICs grow and drive innovation.

ICs have more freedom than most to craft their own job description around their own preferences and skill sets. Some ICs may choose to focus on hands-on coding, tackling deeply complex problems within an organization. Others may take a more holistic approach, examining how teams intersect and continually collaborating in different areas to advance projects. Either way, an IC needs to be able to see the organization from a broad perspective, and know how to spot the right places to focus their attention.

Effective ICs also need the space and resources to stay on the bleeding edge of their fields. In a domain like AI/ML that’s evolving so rapidly, continuous learning and exploration are essential. It’s not a nice-to-have feature, but a core part of the job, and since your time as an individual doesn’t scale, it requires dedication to time management.

Shaping the future

The role of an executive IC in engineering is all about combining deep technical expertise with a strategic mindset. That’s a key ingredient in the kind of transformational change that AI is driving, but realizing this potential will require a shift in the way many organizations think about leadership.

I’m excited to see more engineers pursue an IC path and bring their unique mix of skills to bear on the toughest challenges in AI/ML. With the right organizational support, I believe a new generation of IC leaders will emerge and help shape the future of the field. That’s the opportunity ahead of us, and I’m looking forward to leading by doing.

This content was produced by Capital One. It was not written by MIT Technology Review’s editorial staff.

Is robotics about to have its own ChatGPT moment?

Henry and Jane Evans are used to awkward houseguests. For more than a decade, the couple, who live in Los Altos Hills, California, have hosted a slew of robots in their home.

In 2002, at age 40, Henry had a massive stroke, which left him with quadriplegia and an inability to speak. While they’ve experimented with many advanced robotic prototypes in a bid to give Henry more autonomy, it’s one recent model, which works in tandem with AI models, that has made the biggest difference: helping to brush his hair and opening up his relationship with his granddaughter.

A new generation of scientists and inventors believes that the previously missing ingredient of AI can give robots the ability to learn new skills and adapt to new environments faster than ever before. This new approach, just maybe, can finally bring robots out of the factory and into our homes. Read the full story.

—Melissa Heikkilä

Melissa’s story is from the next magazine issue of MIT Technology Review, set to go live on April 24, on the theme of Build. If you don’t subscribe already, sign up now to get a copy when it lands.

The inadvertent geoengineering experiment that the world is now shutting off

The news: When we talk about climate change, the focus is usually on the role that greenhouse-gas emissions play in driving up global temperatures, and rightly so. But another important, less-known phenomenon is also heating up the planet: reductions in other types of pollution.

In a nutshell: In particular, the world’s power plants, factories, and ships are pumping much less sulfur dioxide into the air, thanks to an increasingly strict set of global pollution regulations. Sulfur dioxide creates aerosol particles in the atmosphere that can directly reflect sunlight back into space or act as the “condensation nuclei” around which cloud droplets form. More or thicker clouds, in turn, also cast away more sunlight. So when we clean up pollution, we also ease this cooling effect.

Why it matters: Cutting air pollution has unequivocally saved lives. But as the world rapidly warms, it’s critical to understand the impact of pollution-fighting regulations on the global thermostat as well. Read the full story.

—James Temple

This story is from The Spark, our weekly climate and energy newsletter. Sign up to receive it in your inbox every Wednesday.

The must-reads

I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology.

1 Election workers are worried about AI

Generative models could make it easier for election deniers to spam offices. (Wired $)

+ Eric Schmidt has a 6-point plan for fighting election misinformation. (MIT Technology Review)

2 Apple has warned users in 92 countries of mercenary spyware attacks

It said it had high confidence that the targets were at genuine risk. (TechCrunch)

3 The US is in desperate need of chip engineers

Without them, it can’t meet its lofty semiconductor production goals. (WSJ $)

+ Taiwanese chipmakers are looking to expand overseas. (FT $)

+ How ASML took over the chipmaking chessboard. (MIT Technology Review)

4 Meet the chatbot tutors

Tens of thousands of gig economy workers are training tomorrow’s models. (NYT $)

+ Adobe is paying photographers $120 per video to train its generator. (Bloomberg $)

+ The next wave of AI coding tools is emerging. (IEEE Spectrum)

+ The people paid to train AI are outsourcing their work… to AI. (MIT Technology Review)

5 The Middle East is rushing to build AI infrastructure

Both Saudi Arabia and the UAE see sprawling data centers as key to becoming the region’s AI superpower. (Bloomberg $)

6 Political content creators and activists are lobbying Meta

They claim the company’s decision to limit the reach of ‘political’ content is threatening their livelihoods. (WP $)

7 The European Space Agency is planning an artificial solar eclipse

The mission, due to launch later this year, should provide essential insight into the sun’s atmosphere. (IEEE Spectrum)

8 How AI is helping to recover Ireland’s marginalized voices

Starting with the dung queen of Dublin. (The Guardian)

+ How AI is helping historians better understand our past. (MIT Technology Review)

9 Video game history is vanishing before our eyes

As consoles fall out of use, their games are consigned to history too. (FT $)

10 Dating apps are struggling to make looking for love fun

Charging users seems counterintuitive, then. (The Atlantic $)

+ Here’s how the net’s newest matchmakers help you find love. (MIT Technology Review)

Quote of the day

“We’re women sharing cool things with each other directly. You want it to go back to men running QVC?”

—Micah Enriquez, a successful ‘cleanfluencer’ who shares cleaning tips and processes with her followers, tells New York Magazine she feels that criticism leveled at such content creators has a sexist element.

The big story

Is it possible to really understand someone else’s mind?

November 2023

Technically speaking, neuroscientists have been able to read your mind for decades. It’s not easy, mind you. First, you must lie motionless within a hulking fMRI scanner, perhaps for hours, while you watch films or listen to audiobooks.

None of this, of course, can be done without your consent; for the foreseeable future, your thoughts will remain your own, if you so choose. But if you do elect to endure claustrophobic hours in the scanner, the software will learn to generate a bespoke reconstruction of what you were seeing or listening to, just by analyzing how blood moves through your brain.

More recently, researchers have deployed generative AI tools, like Stable Diffusion and GPT, to create far more realistic, if not entirely accurate, reconstructions of films and podcasts based on neural activity.

But as exciting as the idea of extracting a movie from someone’s brain activity may be, it is a highly limited form of “mind reading.” To really experience the world through your eyes, scientists would have to be able to infer not just what film you are watching but also what you think about it, and how it makes you feel. And these interior thoughts and feelings are far more difficult to access. Read the full story.

—Grace Huckins

We can still have nice things

A place for comfort, fun and distraction to brighten up your day. (Got any ideas? Drop me a line or tweet ’em at me.)

+ Intrepid archaeologists have uncovered beautiful new frescos in the ruins of Pompeii.

+ This doughy jellyfish sure looks tasty.

+ A short rumination on literary muses, from Zelda Fitzgerald to Neal Cassady.

+ Grammar rules are made to be broken.

Silent. Rigid. Clumsy.

Henry and Jane Evans are used to awkward houseguests. For more than a decade, the couple, who live in Los Altos Hills, California, have hosted a slew of robots in their home.

In 2002, at age 40, Henry had a massive stroke, which left him with quadriplegia and an inability to speak. Since then, he’s learned how to communicate by moving his eyes over a letter board, but he is highly reliant on caregivers and his wife, Jane.

Henry got a glimmer of a different kind of life when he saw Charlie Kemp on CNN in 2010. Kemp, a robotics professor at Georgia Tech, was on TV talking about PR2, a robot developed by the company Willow Garage. PR2 was a massive two-armed machine on wheels that looked like a crude metal butler. Kemp was demonstrating how the robot worked, and talking about his research on how health-care robots could help people. He showed how the PR2 robot could hand some medicine to the television host.

“All of a sudden, Henry turns to me and says, ‘Why can’t that robot be an extension of my body?’ And I said, ‘Why not?’” Jane says.

There was a solid reason why not. While engineers have made great progress in getting robots to work in tightly controlled environments like labs and factories, the home has proved difficult to design for. Out in the real, messy world, furniture and floor plans differ wildly; children and pets can jump in a robot’s way; and clothes that need folding come in different shapes, colors, and sizes. Managing such unpredictable settings and varied conditions has been beyond the capabilities of even the most advanced robot prototypes.

That seems to finally be changing, in large part thanks to artificial intelligence. For decades, roboticists have more or less focused on controlling robots’ “bodies”—their arms, legs, levers, wheels, and the like—via purpose-driven software. But a new generation of scientists and inventors believes that the previously missing ingredient of AI can give robots the ability to learn new skills and adapt to new environments faster than ever before. This new approach, just maybe, can finally bring robots out of the factory and into our homes.

Progress won’t happen overnight, though, as the Evanses know far too well from their many years of using various robot prototypes.

PR2 was the first robot they brought in, and it opened entirely new skills for Henry. It would hold a beard shaver and Henry would move his face against it, allowing him to shave and scratch an itch by himself for the first time in a decade. But at 450 pounds (200 kilograms) or so and $400,000, the robot was difficult to have around. “It could easily take out a wall in your house,” Jane says. “I wasn’t a big fan.”

More recently, the Evanses have been testing out a smaller robot called Stretch, which Kemp developed through his startup Hello Robot. The first iteration launched during the pandemic with a much more reasonable price tag of around $18,000.

Stretch weighs about 50 pounds. It has a small mobile base, a stick with a camera dangling off it, and an adjustable arm featuring a gripper with suction cups at the ends. It can be controlled with a console controller. Henry controls Stretch using a laptop, with a tool that tracks his head movements to move a cursor around. He is able to move his thumb and index finger enough to click a computer mouse. Last summer, Stretch was with the couple for more than a month, and Henry says it gave him a whole new level of autonomy. “It was practical, and I could see using it every day,” he says.

Using his laptop, he could get the robot to brush his hair and have it hold fruit kebabs for him to snack on. It also opened up Henry’s relationship with his granddaughter Teddie. Before, they barely interacted. “She didn’t hug him at all goodbye. Nothing like that,” Jane says. But “Papa Wheelie” and Teddie used Stretch to play, engaging in relay races, bowling, and magnetic fishing.

Stretch doesn’t have much in the way of smarts: it comes with some preinstalled software, such as the web interface that Henry uses to control it, and other capabilities such as AI-enabled navigation. The main benefit of Stretch is that people can plug in their own AI models and use them to do experiments. But it offers a glimpse of what a world with useful home robots could look like. Robots that can do many of the things humans do in the home—tasks such as folding laundry, cooking meals, and cleaning—have been a dream of robotics research since the inception of the field in the 1950s. For a long time, it’s been just that: “Robotics is full of dreamers,” says Kemp.

But the field is at an inflection point, says Ken Goldberg, a robotics professor at the University of California, Berkeley. Previous efforts to build a useful home robot, he says, have emphatically failed to meet the expectations set by popular culture—think the robotic maid from The Jetsons. Now things are very different. Thanks to cheap hardware like Stretch, along with efforts to collect and share data and advances in generative AI, robots are getting more competent and helpful faster than ever before. “We’re at a point where we’re very close to getting capability that is really going to be useful,” Goldberg says.

Folding laundry, cooking shrimp, wiping surfaces, unloading shopping baskets—today’s AI-powered robots are learning to do tasks that for their predecessors would have been extremely difficult.

Missing pieces

There’s a well-known observation among roboticists: What is hard for humans is easy for machines, and what is easy for humans is hard for machines. Called Moravec’s paradox, it was first articulated in the 1980s by Hans Moravec, then a roboticist at the Robotics Institute of Carnegie Mellon University. A robot can play chess or hold an object still for hours on end with no problem. Tying a shoelace, catching a ball, or having a conversation is another matter.

There are three reasons for this, says Goldberg. First, robots lack precise control and coordination. Second, their understanding of the surrounding world is limited because they are reliant on cameras and sensors to perceive it. Third, they lack an innate sense of practical physics.

“Pick up a hammer, and it will probably fall out of your gripper, unless you grab it near the heavy part. But you don’t know that if you just look at it, unless you know how hammers work,” Goldberg says.

On top of these basic considerations, there are many other technical things that need to be just right, from motors to cameras to Wi-Fi connections, and hardware can be prohibitively expensive.

Mechanically, we’ve been able to do fairly complex things for a while. In a video from 1957, two large robotic arms are dexterous enough to pinch a cigarette, place it in the mouth of a woman at a typewriter, and reapply her lipstick. But the intelligence and the spatial awareness of that robot came from the person who was operating it.

“The missing piece is: How do we get software to do [these things] automatically?” says Deepak Pathak, an assistant professor of computer science at Carnegie Mellon.

Researchers training robots have traditionally approached this problem by planning everything the robot does in excruciating detail. Robotics giant Boston Dynamics used this approach when it developed its boogying and parkouring humanoid robot Atlas. Cameras and computer vision are used to identify objects and scenes. Researchers then use that data to make models that can be used to predict with extreme precision what will happen if a robot moves a certain way. Using these models, roboticists plan the motions of their machines by writing a very specific list of actions for them to take. The engineers then test these motions in the laboratory many times and tweak them to perfection.

This approach has its limits. Robots trained like this are strictly choreographed to work in one specific setting. Take them out of the laboratory and into an unfamiliar location, and they are likely to topple over.

Compared with other fields, such as computer vision, robotics has been in the dark ages, Pathak says. But that might not be the case for much longer, because the field is seeing a big shake-up. Thanks to the AI boom, he says, the focus is now shifting from feats of physical dexterity to building “general-purpose robot brains” in the form of neural networks. Much as the human brain is adaptable and can control different aspects of the human body, these networks can be adapted to work in different robots and different scenarios. Early signs of this work show promising results.

Robots, meet AI

For a long time, robotics research was an unforgiving field, plagued by slow progress. At the Robotics Institute at Carnegie Mellon, where Pathak works, he says, “there used to be a saying that if you touch a robot, you add one year to your PhD.” Now, he says, students get exposure to many robots and see results in a matter of weeks.

What separates this new crop of robots is their software. Instead of the traditional painstaking planning and training, roboticists have started using deep learning and neural networks to create systems that learn from their environment on the go and adjust their behavior accordingly. At the same time, new, cheaper hardware, such as off-the-shelf components and robots like Stretch, is making this sort of experimentation more accessible.

Broadly speaking, there are two popular ways researchers are using AI to train robots. Pathak has been using reinforcement learning, an AI technique that allows systems to improve through trial and error, to get robots to adapt their movements in new environments. This is a technique that Boston Dynamics has also started using in its robot “dogs” called Spot.

Deepak Pathak’s team at Carnegie Mellon has used an AI technique called reinforcement learning to create a robotic dog that can do extreme parkour with minimal pre-programming.

In 2022, Pathak’s team used this method to create four-legged robot “dogs” capable of scrambling up steps and navigating tricky terrain. The robots were first trained to move around in a general way in a simulator. Then they were set loose in the real world, with a single built-in camera and computer vision software to guide them. Other similar robots rely on tightly prescribed internal maps of the world and cannot navigate beyond them.

Pathak says the team’s approach was inspired by human navigation. Humans receive information about the surrounding world from their eyes, and this helps them instinctively place one foot in front of the other to get around in an appropriate way. Humans don’t typically look down at the ground under their feet when they walk, but a few steps ahead, at a spot where they want to go. Pathak’s team trained its robots to take a similar approach to walking: each one used the camera to look ahead. The robot was then able to memorize what was in front of it for long enough to guide its leg placement. The robots learned about the world in real time, without internal maps, and adjusted their behavior accordingly. At the time, experts told MIT Technology Review the technique was a “breakthrough in robot learning and autonomy” and could allow researchers to build legged robots capable of being deployed in the wild.

Pathak’s robot dogs have since leveled up. The team’s latest algorithm allows a quadruped robot to do extreme parkour. The robot was again trained to move around in a general way in a simulation. But using reinforcement learning, it was then able to teach itself new skills on the go, such as how to jump long distances, walk on its front legs, and clamber up tall boxes twice its height. These behaviors were not something the researchers programmed. Instead, the robot learned through trial and error and visual input from its front camera. “I didn’t believe it was possible three years ago,” Pathak says.

In the other popular technique, called imitation learning, models learn to perform tasks by, for example, imitating the actions of a human teleoperating a robot or using a VR headset to collect data on a robot. It’s a technique that has gone in and out of fashion over decades but has recently become more popular with robots that do manipulation tasks, says Russ Tedrake, vice president of robotics research at the Toyota Research Institute and an MIT professor.

By pairing this technique with generative AI, researchers at the Toyota Research Institute, Columbia University, and MIT have been able to quickly teach robots to do many new tasks. They believe they have found a way to extend the technology propelling generative AI from the realm of text, images, and videos into the domain of robot movements.

The idea is to start with a human, who manually controls the robot to demonstrate behaviors such as whisking eggs or picking up plates. Using a technique called diffusion policy, the robot is then able to use the data fed into it to learn skills. The researchers have taught robots more than 200 skills, such as peeling vegetables and pouring liquids, and say they are working toward teaching 1,000 skills by the end of the year.

Many others have taken advantage of generative AI as well. Covariant, a robotics startup that spun off from OpenAI’s now-shuttered robotics research unit, has built a multimodal model called RFM-1. It can accept prompts in the form of text, image, video, robot instructions, or measurements. Generative AI allows the robot to both understand instructions and generate images or videos relating to those tasks.

The Toyota Research Institute team hopes its work will one day lead to “large behavior models,” which are analogous to large language models, says Tedrake. “A lot of people think behavior cloning is going to get us to a ChatGPT moment for robotics,” he says.

In a similar demonstration, earlier this year a team at Stanford managed to use a relatively cheap off-the-shelf robot costing $32,000 to do complex manipulation tasks such as cooking shrimp and cleaning stains. It learned those new skills quickly with AI.

Called Mobile ALOHA (a loose acronym for “a low-cost open-source hardware teleoperation system”), the robot learned to cook shrimp with the help of just 20 human demonstrations and data from other tasks, such as tearing off a paper towel or piece of tape. The Stanford researchers found that AI can help robots acquire transferable skills: training on one task can improve their performance on others.

While the current generation of generative AI works with images and language, researchers at the Toyota Research Institute, Columbia University, and MIT believe the approach can extend to the domain of robot motion.

This is all laying the groundwork for robots that can be useful in homes. Human needs change over time, and teaching robots to reliably do a wide range of tasks is important, as it will help them adapt to us. That is also crucial to commercialization—first-generation home robots will come with a hefty price tag, and the robots need to have enough useful skills for regular consumers to want to invest in them.

For a long time, a lot of the robotics community was very skeptical of these kinds of approaches, says Chelsea Finn, an assistant professor of computer science and electrical engineering at Stanford University and an advisor for the Mobile ALOHA project. Finn says that nearly a decade ago, learning-based approaches were rare at robotics conferences and disparaged in the robotics community. “The [natural-language-processing] boom has been convincing more of the community that this approach is really, really powerful,” she says.

There is one catch, however. In order to imitate new behaviors, the AI models need plenty of data.

More is more

Unlike chatbots, which can be trained by using billions of data points hoovered from the internet, robots need data specifically created for robots. They need physical demonstrations of how washing machines and fridges are opened, dishes picked up, or laundry folded, says Lerrel Pinto, an assistant professor of computer science at New York University. Right now that data is very scarce, and it takes a long time for humans to collect.

Some researchers are trying to use existing videos of humans doing things to train robots, hoping the machines will be able to copy the actions without the need for physical demonstrations.

Pinto’s lab has also developed a neat, cheap data collection approach that connects robotic movements to desired actions. Researchers took a reacher-grabber stick, similar to ones used to pick up trash, and attached an iPhone to it. Human volunteers can use this system to film themselves doing household chores, mimicking the robot’s view of the end of its robotic arm. Using this stand-in for Stretch’s robotic arm and an open-source system called DOBB-E, Pinto’s team was able to get a Stretch robot to learn tasks such as pouring from a cup and opening shower curtains with just 20 minutes of iPhone data.

But for more complex tasks, robots would need even more data and more demonstrations.

The requisite scale would be hard to reach with DOBB-E, says Pinto, because you’d basically need to persuade every human on Earth to buy the reacher-grabber system, collect data, and upload it to the internet.

A new initiative kick-started by Google DeepMind, called the Open X-Embodiment Collaboration, aims to change that. Last year, the company partnered with 34 research labs and about 150 researchers to collect data from 22 different robots, including Hello Robot’s Stretch. The resulting data set, which was published in October 2023, consists of robots demonstrating 527 skills, such as picking, pushing, and moving.

Sergey Levine, a computer scientist at UC Berkeley who participated in the project, says the goal was to create a “robot internet” by collecting data from labs around the world. This would give researchers access to bigger, more scalable, and more diverse data sets. The deep-learning revolution that led to the generative AI of today started in 2012 with the rise of ImageNet, a vast online data set of images. The Open X-Embodiment Collaboration is an attempt by the robotics community to do something similar for robot data.

Early signs show that more data is leading to smarter robots. The researchers built two versions of a model for robots, called RT-X, that could be either run locally on individual labs’ computers or accessed via the web. The larger, web-accessible model was pretrained on internet data, drawing on large language and image models, to develop a “visual common sense,” or a baseline understanding of the world.

When the researchers ran the RT-X model on many different robots, they discovered that the robots were able to learn skills 50% more successfully than in the systems each individual lab was developing.

“I don’t think anybody saw that coming,” says Vincent Vanhoucke, Google DeepMind’s head of robotics. “Suddenly there is a path to basically leveraging all these other sources of data to bring about very intelligent behaviors in robotics.”

Many roboticists think that large vision-language models, which are able to analyze image and language data, might offer robots important hints as to how the surrounding world works, Vanhoucke says. They offer semantic clues about the world and could help robots with reasoning, deducing things, and learning by interpreting images. To test this, researchers took a robot that had been trained on the larger model and asked it to point to a picture of Taylor Swift. The researchers had never shown the robot pictures of Swift, but it was still able to identify the pop star because it had a web-scale understanding of who she was, says Vanhoucke.

Vanhoucke says Google DeepMind is increasingly using techniques similar to those it would use for machine translation to translate from English to robotics. Last summer, Google introduced a vision-language-action model called RT-2. This model gets its general understanding of the world from online text and images it has been trained on, as well as its own interactions in the real world. It translates that data into robotic actions. Each robot has a slightly different way of translating English into action, he adds.

“We increasingly feel like a robot is essentially a chatbot that speaks robotese,” Vanhoucke says.

Baby steps

Despite the fast pace of development, robots still face many challenges before they can be released into the real world. They are still way too clumsy for regular consumers to justify spending tens of thousands of dollars on them. Robots also still lack the sort of common sense that would allow them to multitask. And they need to move from just picking things up and placing them somewhere to putting things together, says Goldberg—for example, putting a deck of cards or a board game back in its box and then into the games cupboard.

But to judge from the early results of integrating AI into robots, roboticists are not wasting their time, says Pinto.

“I feel fairly confident that we will see some semblance of a general-purpose home robot. Now, will it be accessible to the general public? I don’t think so,” he says. “But in terms of raw intelligence, we are already seeing signs right now.”

The next generation of robots might do more than assist humans with their everyday chores or help people like Henry Evans live more independent lives. For researchers like Pinto, there is an even bigger goal in sight.

Home robotics offers one of the best benchmarks for human-level machine intelligence, he says. The fact that a human can operate intelligently in the home environment, he adds, means we know this is a level of intelligence that can be reached.

“It’s something which we can potentially solve. We just don’t know how to solve it,” he says.

For Henry and Jane Evans, a big win would be to get a robot that simply works reliably. The Stretch robot that the Evanses experimented with is still too buggy to use without researchers present to troubleshoot, and their home doesn’t always have the dependable Wi-Fi connectivity Henry needs in order to communicate with Stretch using a laptop.

Even so, Henry says, one of the greatest benefits of his experiment with robots has been independence: “All I do is lay in bed, and now I can do things for myself that involve manipulating my physical environment.”

Thanks to Stretch, for the first time in two decades, Henry was able to hold his own playing cards during a match.

“I kicked everyone’s butt several times,” he says.

“Okay, let’s not talk too big here,” Jane says, and laughs.

Usually when we talk about climate change, the focus is squarely on the role that greenhouse-gas emissions play in driving up global temperatures, and rightly so. But another important, less-known phenomenon is also heating up the planet: reductions in other types of pollution.

In particular, the world’s power plants, factories, and ships are pumping much less sulfur dioxide into the air, thanks to an increasingly strict set of global pollution regulations. Sulfur dioxide creates aerosol particles in the atmosphere that can directly reflect sunlight back into space or act as the “condensation nuclei” around which cloud droplets form. More or thicker clouds, in turn, also cast away more sunlight. So when we clean up pollution, we also ease this cooling effect.

Before we go any further, let me stress: cutting air pollution is smart public policy that has unequivocally saved lives and prevented terrible suffering.

The fine particulate matter produced by burning coal, gas, wood, and other biomatter is responsible for millions of premature deaths every year through cardiovascular disease, respiratory illnesses, and various forms of cancer, studies consistently show. Sulfur dioxide causes asthma and other respiratory problems, contributes to acid rain, and depletes the protective ozone layer.

But as the world rapidly warms, it’s critical to understand the impact of pollution-fighting regulations on the global thermostat as well. Scientists have baked the drop-off of this cooling effect into net warming projections for the coming decades, but they’re also striving to obtain a clearer picture of just how big a role declining pollution will play.

A new study found that reductions in emissions of sulfur dioxide and other pollutants are responsible for about 38%, as a middle estimate, of the increased “radiative forcing” observed on the planet between 2001 and 2019.

An increase in radiative forcing means that more energy is entering the atmosphere than leaving it, as Kerry Emanuel, a professor of atmospheric science at MIT, lays out in a handy explainer. As that balance has shifted in recent decades, the difference has been absorbed by the oceans and atmosphere, which is what is warming up the planet.

The remainder of the increase is “mainly” attributable to continued rising emissions of heat-trapping greenhouse gases, says Øivind Hodnebrog, a researcher at the Center for International Climate and Environment Research in Norway and lead author of the paper, which relied on climate models, sea-surface temperature readings, and satellite observations.

The study underscores the fact that as carbon dioxide, methane, and other gases continue to drive up temperatures, parallel reductions in air pollution are revealing more of that additional warming, says Zeke Hausfather, a scientist at the independent research organization Berkeley Earth. And it’s happening at a point when, by most accounts, global warming is about to begin accelerating or has already started to do so. (There’s ongoing debate over whether researchers can yet detect that acceleration and whether the world is now warming faster than researchers had expected.)

Because of the cutoff date, the study did not capture a more recent contributor to these trends. Starting in 2020, under new regulations from the International Maritime Organization, commercial shipping vessels have also had to steeply reduce the sulfur content in fuels. Studies have already detected a decrease in the formation of “ship tracks,” or the lines of clouds that often form above busy shipping routes.

Again, this is a good thing in the most important way: maritime pollution alone is responsible for tens of thousands of early deaths every year. But even so, I have seen and heard of suggestions that perhaps we should slow down or alter the implementation of some of these pollution policies, given the declining cooling effect.

A 2013 study explored one way to potentially balance the harms and benefits. The researchers simulated a scenario in which the maritime industry would be required to use very low-sulfur fuels around coastlines, where the pollution has the biggest effect on mortality and health. But then the vessels would double the fuel’s sulfur content when crossing the open ocean.

In that hypothetical world, the cooling effect was a bit stronger and premature deaths declined by 69% with respect to figures at the time, delivering a considerable public health improvement. But notably, under a scenario in which low-sulfur fuels were required across the board, mortality declined by 96%, a difference of more than 13,000 preventable deaths every year.

Now that the rules are in place and the industry is running on low-sulfur fuels, intentionally reintroducing pollution over the oceans would be a far more controversial matter.

While society basically accepted for well over a century that ships were inadvertently emitting sulfur dioxide into the air, flipping those emissions back on for the purpose of easing global warming would amount to a form of solar geoengineering, a deliberate effort to tweak the climate system.

Many think such planetary interventions are far too powerful and unpredictable for us to muck around with. And to be sure, this particular approach would be one of the more ineffective, dangerous, and expensive ways to carry out solar geoengineering, if the world ever decided it should be done at all. The far more commonly studied concept is emitting sulfur dioxide high in the stratosphere, where it would persist for longer and, as a bonus, not be inhaled by humans.

On an episode of the Energy vs. Climate podcast last fall, David Keith, a professor at the University of Chicago who has closely studied the topic, said that it may be possible to slowly implement solar geoengineering in the stratosphere as a means of balancing out the reduced cooling occurring from sulfur dioxide emissions in the troposphere.

“The kind of solar geoengineering ideas that people are talking about seriously would be a thin wedge that would, for example, start replacing what was happening with the added warming we have from unmasking the aerosol cooling from shipping,” he said.

Positioning the use of solar geoengineering as a means of merely replacing a cruder form that the world was shutting down offers a somewhat different mental framing for the concept—though certainly not one that would address all the deep concerns and fierce criticisms.


Read more from MIT Technology Review’s archive:

Back in 2018, I wrote a piece about the maritime rules that were then in the works and the likelihood that they would fuel additional global warming, noting that we were “about to kill a massive, unintentional” experiment in solar geoengineering.

Another thing

Speaking of the concerns about solar geoengineering, late last week I published a deep dive into Harvard’s unsuccessful, decade-long effort to launch a high-altitude balloon to conduct a tiny experiment in the stratosphere. I asked a handful of people who were involved in the project or followed it closely for their insights into what unfolded, the lessons that can be drawn from the episode—and their thoughts on what it means for geoengineering research moving forward.

Keeping up with Climate

Yup, as the industry predicted (and common sense would suggest), this week’s solar eclipse dramatically cut solar power production across North America. But for the most part, grid operators were able to manage their systems smoothly, minus a few price spikes, thanks in part to a steady buildout of battery banks and the availability of other sources like natural gas and hydropower. (Heatmap)

There’s been a pile-up of bad news for Tesla in recent days. First, the company badly missed analyst expectations for vehicle deliveries during the first quarter. Then, Reuters reported that the EV giant has canceled plans for a low-cost, mass-market car. That may have something to do with the move to “prioritize the development of a robotaxi,” which the Wall Street Journal then wrote about. Over on X, Elon Musk denied the Reuters story, sort of—posting that “Reuters is lying (again).” But there’s a growing sense that his transformation into a “far-right activist” is exacting an increasingly high cost on his personal and business brands. (Wall Street Journal)

In a landmark ruling this week, the European Court of Human Rights determined that by not taking adequate steps to address the dangers of climate change, including increasingly severe heat waves that put the elderly at particular risk, Switzerland had violated the human rights of a group of older Swiss women who had brought a case against the country. Legal experts say the ruling creates a precedent that could unleash many similar cases across Europe. (The Guardian)

It is no coincidence that this heightened attention to improving data capabilities coincides with interest in AI, especially generative AI, reaching a fever pitch. Indeed, supporting the development of AI models is among the top reasons the organizations in our research seek to modernize their data capabilities. But AI is not the only reason, or even the main one.

This report seeks to understand organizations’ objectives for their data modernization projects and how they are implementing such initiatives. To do so, it surveyed senior data and technology executives across industries. The research finds that many have made substantial progress on, and investment in, data modernization. Alignment on data strategy and the goals of modernization appears to be far from complete in many organizations, however, leaving a disconnect between data and technology teams and the rest of the business. Data and technology executives and their teams can still do more to understand their colleagues’ data needs and actively seek their input on how to meet them.

Following are the study’s key findings:

AI isn’t the only reason companies are modernizing the data estate. Better decision-making is the primary aim of data modernization, with nearly half (46%) of executives citing this among their three top drivers. Support for AI models (40%) and for decarbonization (38%) are also major drivers of modernization, as are improving regulatory compliance (33%) and boosting operational efficiency (32%).

Data strategy is too often siloed from business strategy. Nearly all surveyed organizations recognize the importance of taking a strategic approach to data. Only 22% say they lack a fully developed data strategy. When asked if their data strategy is completely aligned with key business objectives, however, only 39% agree. Data teams can also do more to bring other business units and functions into strategy discussions: 42% of respondents say their data strategy was developed exclusively by the data or technology team.

Data strategy paves the road to modernization. It is probably no coincidence that most organizations (71%) that have embarked on data modernization in the past two years have had a data strategy in place for longer than that. Modernization goals require buy-in from the business, and implementation decisions need strategic guidance, lest they lead to added complexity or duplication.

Top data pain points are data quality and timeliness. Executives point to substandard data (cited by 41%) and untimely delivery (33%) as the facets of their data operations most in need of improvement. Incomplete or inaccurate data leads enterprise users to question data trustworthiness. This helps explain why the most common modernization measure taken by our respondents’ organizations in the past two years has been to review and upgrade data governance (cited by 45%).

Cross-functional teams and DataOps are key levers to improve data quality. Modern data engineering practices are taking root in many businesses. Nearly half of organizations (48%) are empowering cross-functional data teams to enforce data quality standards, and 47% are prioritizing the implementation of DataOps. These sorts of practices, which echo the agile methodologies and product thinking that have become standard in software engineering, are only starting to make their way into the data realm.

Compliance and security considerations often hinder modernization. Compliance and security concerns are major impediments to modernization, each cited by 44% of the respondents. Regulatory compliance is mentioned particularly frequently by those working in energy, public sector, transport, and financial services organizations. High costs are another oft-cited hurdle (40%), especially among the survey’s smaller organizations.

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review’s editorial staff.

Generative AI can turn your most precious memories into photos that never existed

As a six-year-old growing up in Barcelona, Spain, during the 1940s, Maria would visit a neighbor’s apartment in her building when she wanted to see her father. From its balcony, she could try and try to catch a glimpse of him in the prison below, where he was locked up for opposing the dictatorship of Francisco Franco.

There is no photo of Maria on that balcony. But she can now hold something like it: a fake photo (or memory-based reconstruction, as the Barcelona-based design studio Domestic Data Streamers puts it) of the scene that a real photo might have captured. The studio uses generative image models, such as OpenAI’s DALL-E, to bring people’s memories to life.

The fake snapshots are blurred and distorted, but they can still rewind a lifetime in an instant. Read the full story.

—Will Douglas Heaven

Why China’s regulators are softening on its tech sector

Understanding the Chinese government’s decisions to bolster or suppress a certain technology is always a challenge. Why does it favor this sector instead of that one? What triggers officials to suddenly initiate a crackdown? The answers are never easy to come by.

Angela Huyue Zhang, a law professor in Hong Kong, has some suggestions. She spoke with Zeyi Yang, our China reporter, about how Chinese regulators almost always swing back and forth between regulating tech too much and not enough, how local governments have gone to great lengths to protect local tech companies, and why AI companies in China are receiving more government goodwill than other sectors today. Read the full story.

This story is from China Report, our weekly newsletter covering tech in China. Sign up to receive it in your inbox every Tuesday.

+ Read more about Zeyi’s conversation with Zhang and how to apply her insights to AI here.

The must-reads

I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology.

1 We need new ways to evaluate how safe an AI model is

Current assessment methods haven’t kept pace with the sector’s rapid development. (FT $)

+ Do AI systems need to come with safety warnings? (MIT Technology Review)

2 Japan has grand hopes of rebuilding its fallen chip industry

And a small farming town has a critical role to play. (NYT $)

+ Google is working on its own proprietary chips to power its AI. (WSJ $)

+ Intel also unveiled a new chip to challenge Nvidia’s stranglehold on the industry. (Reuters)

3 Russia canceled the launch of its newest rocket

The failure means the country is lagging further behind its space rivals in China and the US. (Bloomberg $)

+ The US is retiring one of its most powerful rockets, the Delta IV Heavy. (Ars Technica)

4 Gaming giant Blizzard is returning to China

After hashing out a new deal with long-time partner NetEase. (WSJ $)

5 Volkswagen converted a former Golf factory to produce all-electric vehicles

Its success suggests that other factories could follow suit without major job losses. (NYT $)

+ Three frequently asked questions about EVs, answered. (MIT Technology Review)

6 OpenAI is limbering up to fight numerous lawsuits

By hiring some of the world’s top legal minds to fight claims it breached copyright law. (WP $)

+ AI models that are capable of “reasoning” are on the horizon—if you believe the hype. (FT $)

+ OpenAI’s hunger for data is coming back to bite it. (MIT Technology Review)

7 San Francisco’s marshlands urgently need more mud

A new project is optimistic that dumping sediment onto the bay floor can help. (Hakai Magazine)

+ Why salt marshes could help save Venice. (MIT Technology Review)

8 Scientists are using eDNA to track down soldiers’ remains

Environmental DNA is the genetic material we’re all constantly shedding into our surroundings. (Undark Magazine)

+ How environmental DNA is giving scientists a new way to understand our world. (MIT Technology Review)

9 We may have finally solved a cosmic mystery

However, not all cosmologists are in agreement. (New Scientist $)

10 Social media loves angry music

Extreme emotions require a similarly intense soundtrack. (Wired $)

Quote of the day

“I would urge everyone to think of AI as a sword, not just a shield, when it comes to bad content.”

—Nick Clegg, Meta’s global affairs chief, plays up AI’s ability to prevent the spread of misinformation rather than propagate it, the Guardian reports.

The big story

Inside China’s unexpected quest to protect data privacy

August 2020

In the West, it’s widely believed that neither the Chinese government nor Chinese people care about privacy. In reality, this isn’t true.

Over the last few years the Chinese government has begun to implement privacy protections that in many respects resemble those in America and Europe today.

At the same time, however, it has ramped up state surveillance. This paradox has become a defining feature of China’s emerging data privacy regime, and raises a serious question: Can a system endure with strong protections for consumer privacy, but almost none against government snooping? Read the full story.

—Karen Hao

We can still have nice things

A place for comfort, fun and distraction to brighten up your day. (Got any ideas? Drop me a line or tweet ’em at me.)

+ The rare, blind, hairy mole is a sight to behold.

+ When science fiction authors speak, the world listens.

+ Great, now the cat piano is going to be stuck in my head all day.

+ How to speak to just about anyone—should you want to, that is.

If you’re a longtime subscriber to this newsletter, you know that I talk about China’s tech policies all the time. To me, it’s always a challenge to understand and explain the government’s decisions to bolster or suppress a certain technology. Why does it favor this sector instead of that one? What triggers officials to suddenly initiate a crackdown? The answers are never easy to come by.

So I was inspired after talking to Angela Huyue Zhang, a law professor in Hong Kong who’s coming to teach at the University of Southern California this fall, about her new book on interpreting the logic and patterns behind China’s tech regulations.

We talked about how the Chinese government almost always swings back and forth between regulating tech too much and not enough, how local governments have gone to great lengths to protect local tech companies, and why AI companies in China are receiving more government goodwill than other sectors today.

To learn more about Zhang’s fascinating interpretation of the tech regulations in China, read my story published today.

In this newsletter, I want to show you a particularly interesting part of the conversation we had, where Zhang expanded on how market overreactions to Chinese tech policies have become an integral part of the tech regulator’s toolbox today.

The capital markets, perpetually betting on whether tech companies are going to fare better or worse, are always looking for policy signals on whether China is going to start a new crackdown on certain technologies. As a result, they often overreact to every move by the Chinese government.

Zhang: “Investors are already very nervous. They see any sort of regulatory signal very negatively, which is what happened last December when a gaming regulator sent out a draft proposal to regulate and curb gaming activities. It just spooked the market. I mean, actually, that draft law is nothing particularly unusual. It’s quite similar to the previous draft circulated among the lawyers, and there are just a couple of provisions that need a little bit of clarity. But investors were just so panicked.”

That specific example saw nearly $80 billion wiped from the market value of China’s two top gaming companies. The drastic reaction actually forced China’s tech regulators to temporarily shelve the draft law to quell market pessimism.

Zhang: If you look at previous crackdowns, the biggest [damage] that these firms receive is not in the form of a monetary fine. It is in the form of the [changing] market sentiment.

What the agency did at that time was deliberately inflict reputational damage on [Alibaba] by making this surprise announcement on its website, even though it was just one sentence saying “We are investigating Alibaba for monopolistic practice.” But they already caused the market to panic. As soon as they made the announcement, it wiped off $100 billion market cap from this firm overnight. Compared with that, the ultimate fine of $2.8 billion [that Alibaba had to pay] is nothing.

China’s tech regulators use the fact that the stock market predictably overreacts to policy signals to discipline unruly tech companies with minimum effort.

Zhang: These agencies are very adept at inflicting reputational damage. That’s why the market sentiment is something that they like to [utilize], and that kind of thing tends to be ignored because people tend to fix [their] attention on the law.

But playing the market this way is risky. As in the previously mentioned example of the video-game policy, regulators can’t always control how significant the overreactions become, so they risk inflicting broader economic damage that they don’t want to be responsible for.

Zhang: They definitely learned how badly investors can react to their regulatory actions. And if anything, they are very cautious and nervous as well. I think they will be risk-averse in introducing harsh regulations.

I also think the economic downturn has dampened the voices of certain agencies that used to be very aggressive during the crackdown, like the Cyberspace Administration of China. Because it seems like what they did caused tremendous trauma for the Chinese economy.

The fear of causing negative economic fallout by introducing harsh regulatory measures means these government agencies may turn to softer approaches, Zhang says.

Zhang: Now, if they want to take a softer approach, they would have a cup of tea with these firms and say “Here’s what you can do.” So it’s a more consensual approach now than those surprise attacks.

Do you agree that Chinese regulators have learned to take a softer approach to disciplining tech companies? Let me know your thoughts at zeyi@technologyreview.com.

Now read the rest of China Report

Catch up with China

1. Covert Chinese accounts are pretending to be Trump supporters on social media and stoking domestic divisions ahead of November’s US election, taking a page out of the Russian playbook in 2016. (New York Times $)

2. Tesla canceled long-promised plans to release an inexpensive car. Its Chinese rivals are selling EV alternatives at less than one-third the price of the cheapest Teslas. (Reuters $)

3. Donghua Jinlong, a factory in China that makes a nutritional additive called “high-quality industrial-grade glycine,” has unexpectedly become a meme adored by TikTok users. No one really knows why. (You May Also Like)

4. Joe Tsai, Alibaba’s chairman, said in a recent interview that he believes Chinese AI firms lag behind US peers “by two years.” (South China Morning Post $)

5. At first glance, the hacker behind a multi-year attempt to compromise software supply chains seemed to be based in China. But details about the hacker’s working hours suggest the real culprit could be in Eastern Europe or the Middle East. (Wired $)

6. While visiting China, US Treasury Secretary Janet Yellen said that she would not rule out potential tariffs on China’s green energy exports, including products like solar panels and electric vehicles. (CNBC)

Lost in translation

Hong Kong’s food delivery scene used to be split between the German-owned platform Foodpanda and the UK-owned Deliveroo. But the Chinese giant Meituan has been working since May 2023 on cracking into the scene with its new app KeeTa, according to the Chinese publication Zhengu Lab. It has so far managed to capture over 20% of the market.

Both of Meituan’s rivals waive the delivery fee only for larger orders, which makes it hard for people to order food alone. So Meituan decided to position itself as the platform for solo diners by waiving delivery fees for most restaurants, saving users up to 30% in costs. To compete with the established players, the company also pays higher wages to delivery workers and charges lower commission fees to restaurants.

Compared with mainland China, Hong Kong has a tiny delivery market. But Meituan’s efforts here represent a first step as it works to expand into more countries overseas, the company has said.

One more thing

For some hard-to-explain reasons, every time US Treasury Secretary Janet Yellen visits China, Chinese social media becomes obsessed with what and how she eats during the trip. This week, people were zooming into a seven-second video of Yellen’s dinner to scrutinize … her chopstick skills. Whyyyyyyy?

There is no photo of Maria on that balcony. But she can now hold something like it: a fake photo—or memory-based reconstruction, as the Barcelona-based design studio Domestic Data Streamers puts it—of the scene that a real photo might have captured. The fake snapshots are blurred and distorted, but they can still rewind a lifetime in an instant.

“It’s very easy to see when you’ve got the memory right, because there is a very visceral reaction,” says Pau Garcia, founder of Domestic Data Streamers. “It happens every time. It’s like, ‘Oh! Yes! It was like that!’”

Dozens of people have now had their memories turned into images in this way via Synthetic Memories, a project run by Domestic Data Streamers. The studio uses generative image models, such as OpenAI’s DALL-E, to bring people’s memories to life. Since 2022, the studio, which has received funding from the UN and Google, has been working with immigrant and refugee communities around the world to create images of scenes that have never been photographed, or to re-create photos that were lost when families left their previous homes.

Now Domestic Data Streamers is taking over a building next to the Barcelona Design Museum to record people’s memories of the city using synthetic images. Anyone can show up and contribute a memory to the growing archive, says Garcia.

Synthetic Memories could prove to be more than a social or cultural endeavor. This summer, the studio will start a collaboration with researchers to find out if its technique could be used to treat dementia.

Memorable graffiti

The idea for the project came from an experience Garcia had in 2014, when he was working in Greece with an organization that was relocating refugee families from Syria. A woman told him that she was not afraid of being a refugee herself, but she was afraid of her children and grandchildren staying refugees because they might forget their family history: where they shopped, what they wore, how they dressed.

Garcia got volunteers to draw the woman’s memories as graffiti on the walls of the building where the families were staying. “They were really bad drawings, but the idea for synthetic memories was born,” he says. Several years later, when Garcia saw what generative image models could do, he remembered that graffiti. “It was one of the first things that came to mind,” he says.

The process that Garcia and his team have developed is simple. An interviewer sits down with a subject and gets the person to recall a specific scene or event. A prompt engineer with a laptop uses that recollection to write a prompt for a model, which generates an image.

His team has built up a kind of glossary of prompting terms that have proved to be good at evoking different periods in history and different locations. But there’s often some back and forth, some tweaks to the prompt, says Garcia: “You show the image generated from that prompt to the subject and they might say, ‘Oh, the chair was on that side’ or ‘It was at night, not in the day.’ You refine it until you get it to a point where it clicks.”

So far Domestic Data Streamers has used the technique to preserve the memories of people in various migrant communities, including Korean, Bolivian, and Argentine families living in São Paulo, Brazil. But it has also worked with a care home in Barcelona to see how memory-based reconstructions might help older people. The team collaborated with researchers in Barcelona on a small pilot with 12 subjects, applying the approach to reminiscence therapy, a treatment for dementia that aims to stimulate cognitive abilities by showing someone images of the past. Developed in the 1960s, reminiscence therapy has many proponents, but researchers disagree on how effective it is and how it should be done.

The pilot allowed the team to refine the process and ensure that participants could give informed consent, says Garcia. The researchers are now planning to run a larger clinical study in the summer with colleagues at the University of Toronto to compare the use of generative image models with other therapeutic approaches.

One thing they did discover in the pilot was that older people connected with the images much better if they were printed out. “When they see them on a screen, they don’t have the same kind of emotional relation to them,” says Garcia. “But when they could see it physically, the memory got much more important.”

Blurry is best

The researchers have also found that older versions of generative image models work better than newer ones. They started the project using two models that came out in 2022: DALL-E 2 and Stable Diffusion, a free-to-use generative image model released by Stability AI. These can produce images that are glitchy, with warped faces and twisted bodies. But when they switched to the latest version of Midjourney (another generative image model that can create more detailed images), the results did not click with people so well.

“If you make something super-realistic, people focus on details that were not there,” says Garcia. “If it’s blurry, the concept comes across better. Memories are a bit like dreams. They do not behave like photographs, with forensic details. You do not remember if the chair was red or green. You simply remember that there was a chair.”

The team has since gone back to using the older models. “For us, the glitches are a feature,” says Garcia. “Sometimes things can be there and not there. It’s kind of a quantum state in the images that works really well with memories.”

Sam Lawton, an independent filmmaker who is not involved with the studio, is excited by the project. He’s especially happy that the team will be looking at the cognitive effects of these images in a rigorous clinical study. Lawton has used generative image models to re-create his own memories. In a film he made last year, called Expanded Childhood, he used DALL-E to extend old family photos beyond their borders, blurring real childhood scenes with surreal ones.

“The effect exposure to this kind of generated imagery has on a person’s brain was what spurred me to make the film in the first place,” says Lawton. “I was not in a position to launch a full-blown research effort, so I pivoted to the kind of storytelling that’s most natural to me.”

Lawton’s work explores a number of questions: What effect will long-term exposure to AI-generated or altered images have on us? Can such images help reframe traumatic memories? Or do they create a false sense of reality that can lead to confusion and cognitive dissonance?

Lawton showed the images in Expanded Childhood to his father and included his comments in the film: “Something’s wrong. I don’t know what that is. Do I just not remember it?”

Garcia is aware of the dangers of confusing subjective memories with real photographic records. His team’s memory-based reconstructions are not meant to be taken as factual documents, he says. In fact, he notes that this is another reason to stick with the less photorealistic images produced by older versions of generative image models. “It is important to differentiate very clearly what is synthetic memory and what is photography,” says Garcia. “This is a simple way to show that.”

But Garcia is now worried that the companies behind the models might retire their previous versions. Most users look forward to bigger and better models; for Synthetic Memories, less can be more. “I’m really scared that OpenAI will close DALL-E 2 and we will have to use DALL-E 3,” he says.

The views expressed in this video are those of the speakers, and do not represent any endorsement or sponsorship.

Is the open-source approach, which has democratized access to software, ensured transparency, and improved security for decades, now poised to have a similar impact on AI? We dissect the balance between collaboration and control, legal ramifications, ethical considerations, and innovation barriers as the AI industry seeks to democratize the development of large language models.

Explore more from Booz Allen Hamilton on the future of AI

About the speakers

Alison Smith, Director of Generative AI, Booz Allen Hamilton