The internet is about to get a lot safer

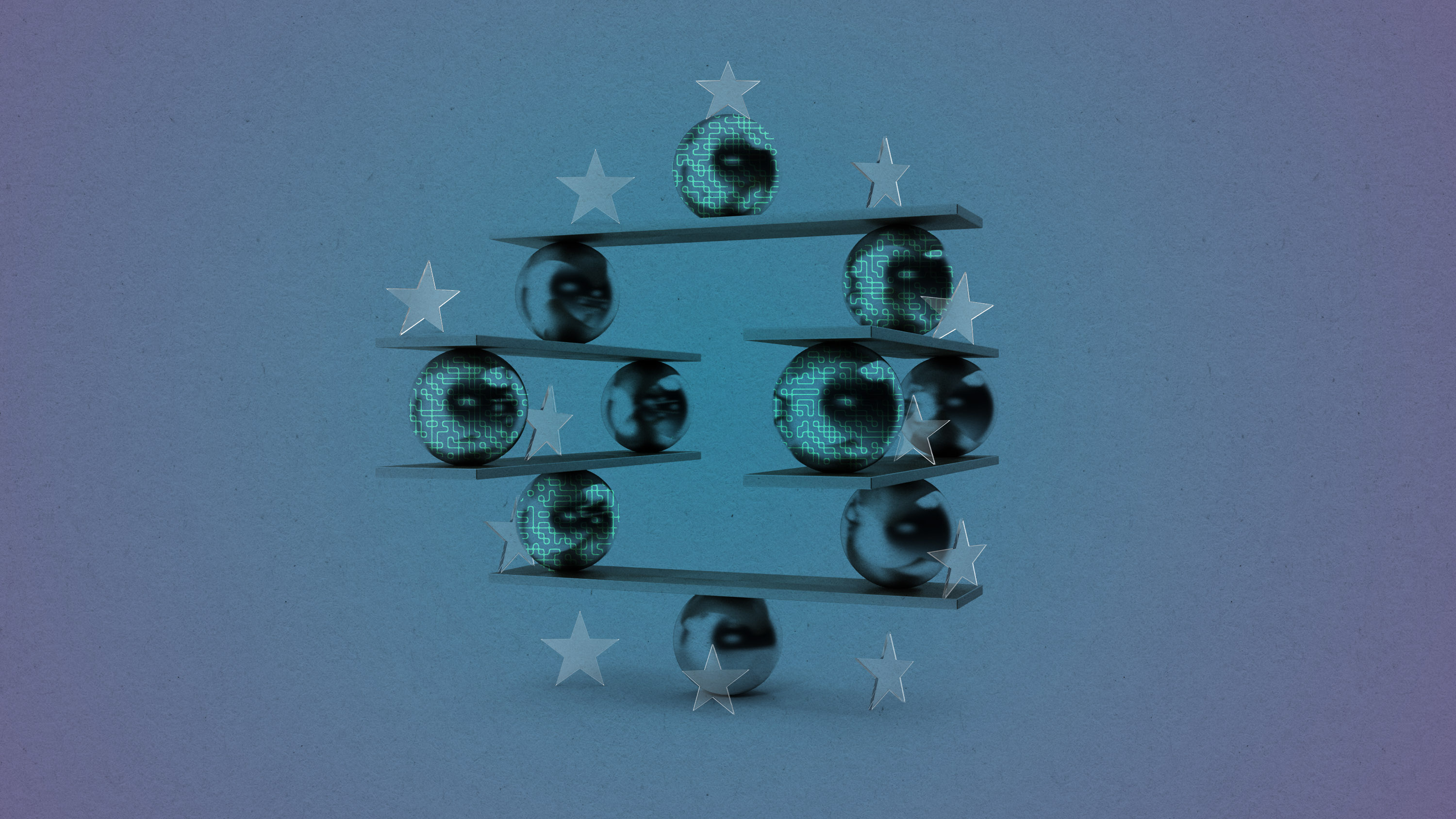

Europe's big tech bill is coming to fruition. Here's what you need to know.

This article is from The Technocrat, MIT Technology Review's weekly tech policy newsletter about power, politics, and Silicon Valley. To receive it in your inbox every Friday, sign up here.

If you use Google, Instagram, Wikipedia, or YouTube, you're going to start noticing changes to content moderation, transparency, and safety features on those sites over the next six months.

Why? It’s down to some major tech legislation that was passed in the EU last year but hasn’t received enough attention (IMO), especially in the US. I’m referring to a pair of bills called the Digital Services Act (DSA) and the Digital Markets Act (DMA), and this is your sign, as they say, to get familiar.

The acts are actually quite revolutionary, setting a global gold standard for tech regulation when it comes to user-generated content. The DSA deals with digital safety and transparency from tech companies, while the DMA addresses antitrust and competition in the industry. Let me explain.

A couple of weeks ago, the DSA reached a major milestone. By February 17, 2023, all major tech platforms in Europe were required to self-report their size, and those figures will be used to sort the companies into tiers. The largest companies, with over 45 million monthly active users in the EU (roughly 10% of the EU population), are creatively called “Very Large Online Platforms” (VLOPs) or “Very Large Online Search Engines” (VLOSEs) and will be held to the strictest standards of transparency and regulation. Smaller online platforms have far fewer obligations, a deliberate choice meant to encourage competition and innovation while still holding Big Tech to account.
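For readers who think in code, the tiering described above boils down to a single threshold check on self-reported EU users. The sketch below is purely illustrative: the function name and labels are hypothetical, it assumes the 45 million cutoff is inclusive, and it ignores the Commission's review of the reported figures.

```python
# Illustrative sketch only: a hypothetical helper that sorts a platform into a
# DSA tier from its self-reported monthly active users in the EU.
# Assumption: the 45 million cutoff is treated as inclusive.
VLOP_THRESHOLD = 45_000_000


def dsa_tier(monthly_active_eu_users: int, is_search_engine: bool = False) -> str:
    """Return a tier label for a platform's self-reported EU user count."""
    if monthly_active_eu_users < 0:
        raise ValueError("user count cannot be negative")
    if monthly_active_eu_users >= VLOP_THRESHOLD:
        # Search engines above the threshold get their own label (VLOSE).
        return "VLOSE" if is_search_engine else "VLOP"
    # Everyone else falls into the lighter-obligation tier.
    return "smaller platform"


# Example with made-up numbers, not real platform figures:
print(dsa_tier(60_000_000))                         # VLOP
print(dsa_tier(60_000_000, is_search_engine=True))  # VLOSE
print(dsa_tier(2_000_000))                          # smaller platform
```

The point of the two-tier design is visible in the branch: only platforms above the cutoff pick up the heavier obligations, which is how the law tries to avoid crushing smaller competitors.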

“If you ask [small companies], for example, to hire 30,000 moderators, you will kill the small companies,” Henri Verdier, the French ambassador for digital affairs, told me last year.

So what will the DSA actually do? So far, at least 18 companies have declared that they qualify as VLOPs and VLOSEs, including most of the well-known players like YouTube, TikTok, Instagram, Pinterest, Google, and Snapchat. (If you want the full list, London School of Economics law professor Martin Husovec has a great Google doc showing where all the major players shake out, along with an accompanying explainer.)

The DSA will require these companies to assess risks on their platforms, like the likelihood of illegal content or election manipulation, make plans for mitigating those risks, and undergo independent audits to verify safety. Smaller companies (those with under 45 million users) will also have to meet new content moderation standards that include “expeditiously” removing illegal content once flagged, notifying users of that removal, and increasing enforcement of existing company policies.

Proponents of the legislation say the bill will help bring an end to the era of tech-company self-regulation. “I don’t want the companies to decide what is and what isn’t forbidden without any separation of power, without any accountability, without any reporting, without any possibility to contest,” Verdier says. “It’s very dangerous.”

That said, the bill makes it clear that platforms aren’t liable for illegal user-generated content, unless they are aware of the content and fail to remove it.

Perhaps most important, the DSA requires companies to significantly increase transparency, through reporting obligations for “terms of service” notices and regular, audited reports about content moderation. Regulators hope this will have widespread effects on public conversations about the societal risks of Big Tech platforms, such as hate speech, misinformation, and violence.

What will you notice? You will be able to participate in content moderation decisions that companies make and formally contest them. The DSA will effectively outlaw shadow banning (the practice of deprioritizing content without notice), curb cyberviolence against women, and ban targeted advertising for users under 18. There will also be a lot more public data around how recommendation algorithms, advertisements, content, and account management work on the platforms, shedding new light on how the biggest tech companies operate. Historically, tech companies have been very hesitant to share platform data with the public or even with academic researchers.

What’s next? The European Commission (EC) will now review the reported user numbers, and it has time to challenge them or request more information from tech companies. One noteworthy issue is that porn sites were absent from the “Very Large” category, which Husovec called “shocking.” He told me he thinks the EC should challenge their reported user numbers.

Once the size groupings are confirmed, the largest companies will have until September 1, 2023, to comply with the regulations, while smaller companies will have until February 17, 2024. Many experts anticipate that companies will roll out some of the changes to all users, not just those living in the EU. With Section 230 reform looking unlikely in the US, many US users will benefit from a safer internet mandated abroad.

What else I’m reading

More chaos, and layoffs, at Twitter.

- Elon Musk has had another big news week after laying off another 200 people, or 10% of Twitter’s remaining staff, over the weekend. These employees were presumably part of the “hard core” cohort who had agreed to abide by Musk’s aggressive working conditions.

- NetBlocks has reported four major outages of the site since the beginning of February.

Everyone is trying to make sense of the generative-AI hoopla.

- The FTC released a statement warning companies not to lie about the capabilities of their AIs. I also recommend reading this helpful piece from my colleague Melissa Heikkilä about how to use generative AI responsibly and this explainer about 10 legal and business risks of generative AI by Matthew Ferraro from Tech Policy Press.

- The dangers of the tech are already making news. This reporter broke into his bank account using an AI-generated voice.

There were more internet shutdowns than ever in 2022, continuing the trend of authoritarian censorship.

- This week, Access Now published its annual report that tracks shutdowns around the world. India, again, led the list with the most shutdowns.

- Last year, I spoke with Dan Keyserling, who worked on the 2021 report, to learn more about how shutdowns are weaponized. During our interview, he told me, “Internet shutdowns are becoming more frequent. More governments are experimenting with curtailing internet access as a tool for affecting the behavior of citizens. The costs of internet shutdowns are arguably increasing both because governments are becoming more sophisticated about how they approach this, but also, we’re living more of our lives online.”

What I learned this week

Data brokers are selling mental-health data online, according to a new report from the Duke Cyber Policy Program. The researcher asked 37 data brokers for mental-health information, and 11 replied willingly. The report details how these data brokers offered to sell information on depression, ADHD, and insomnia with little restriction. Some of the data was tied to people’s names and addresses.

In an interview with PBS, project lead Justin Sherman explained, “There are a range of companies who are not covered by the narrow health privacy regulations we have. And so they are free legally to collect and even share and sell this kind of health data, which enables a range of companies who can’t get at this normally—advertising firms, Big Pharma, even health insurance companies—to buy up this data and to do things like run ads, profile consumers, make determinations potentially about health plan pricing. And the data brokers enable these companies to get around health regulations.”

On March 3, the FTC announced a ban preventing the online mental-health company BetterHelp from sharing people’s data with other companies.
