Robots that learn as they fail could unlock a new era of AI

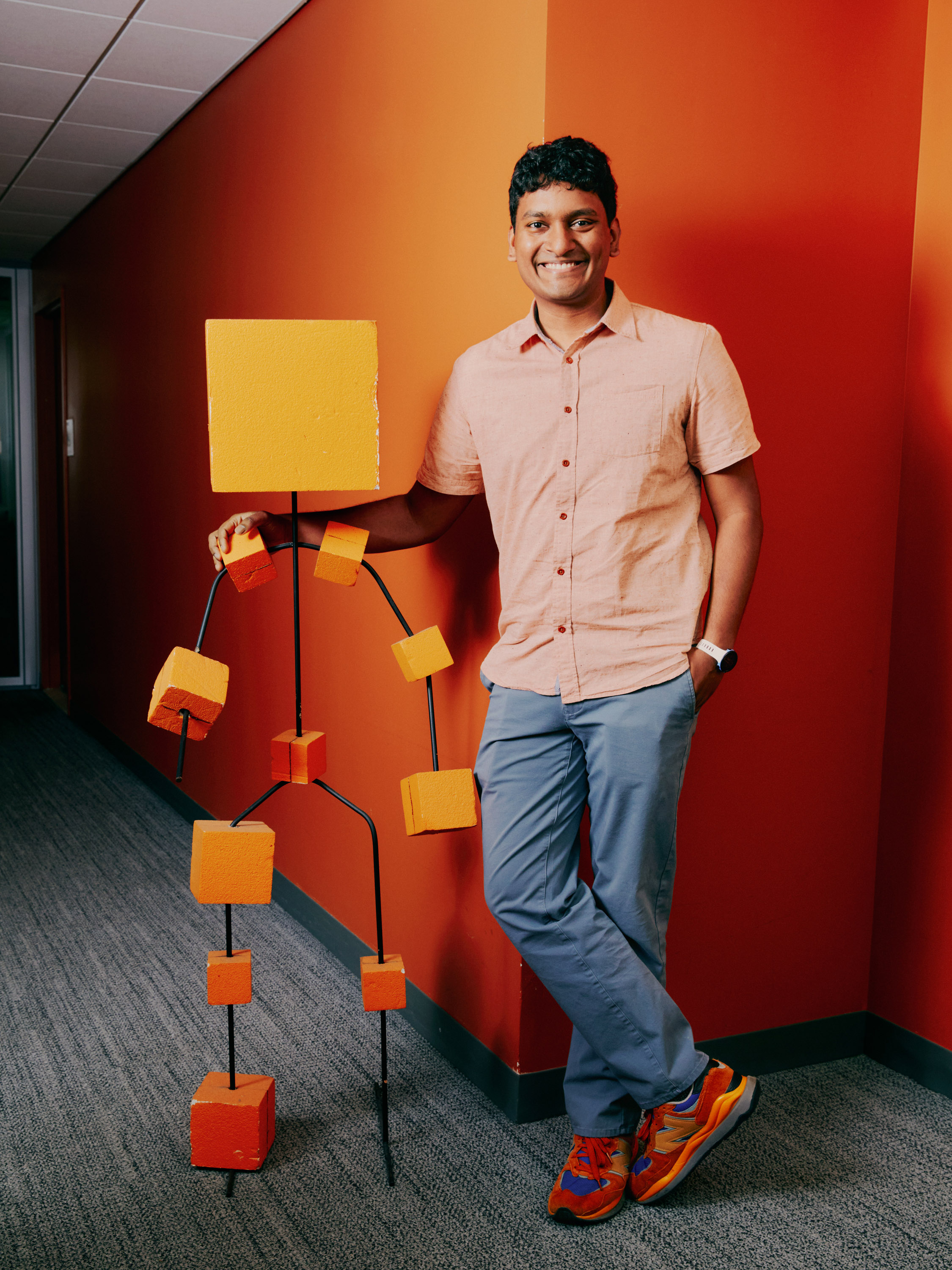

Lerrel Pinto says the key to building useful home robots is helping them learn from their mistakes.

Lerrel Pinto is one of MIT Technology Review’s 2023 Innovators Under 35.

Asked to explain his work, Lerrel Pinto, 31, likes to shoot back another question: When did you last see a cool robot in your home? The answer typically depends on whether the person asking owns a robot vacuum cleaner: yesterday or never.

Pinto’s working to fix that. A computer science researcher at New York University, he wants to see robots in the home that do a lot more than vacuum: “How do we actually create robots that can be a more integral part of our lives, doing chores, doing elder care or rehabilitation—you know, just being there when we need them?”

The problem is that training multiskilled robots requires lots of data. Pinto’s solution is to find novel ways to collect that data—in particular, getting robots to collect it as they learn, an approach called self-supervised learning (a technique also championed by Meta’s chief AI scientist and Pinto’s NYU colleague Yann LeCun, among others).

“Lerrel’s work is a major milestone in bringing machine learning and robotics together,” says Pieter Abbeel, director of the robot learning lab at the University of California, Berkeley. “His current research will be looked back upon as having laid many of the early building blocks of the future of robot learning.”

The idea of a household robot that can make coffee or wash dishes is decades old. But such machines remain the stuff of science fiction. Recent leaps forward in other areas of AI, especially large language models, made use of enormous data sets scraped from the internet. You can’t do that with robots, says Pinto.

Self-driving-car companies clock millions of hours on the road, collecting data to train the models that power their vehicles. Makers of household robots face a similar challenge: they would need to record many hours of robot's-eye footage of different tasks being carried out in different settings.

Pinto hit one of his first milestones back in 2016, when he created the world’s largest robotics data set at the time by getting robots to create and label their own training data and running them 24/7 without human supervision.

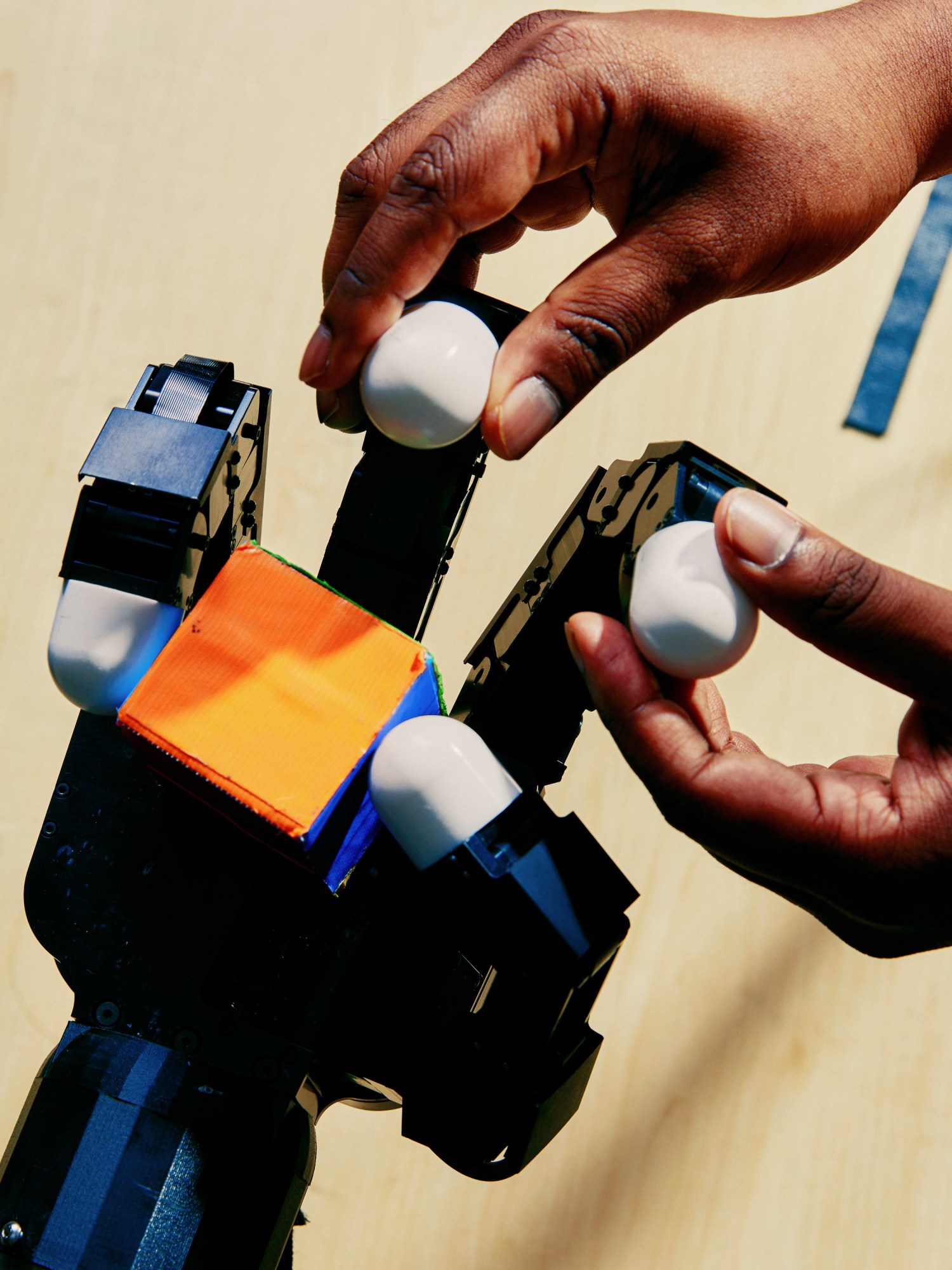

He and his colleagues have since developed learning algorithms that allow a robot to improve as it fails. A robot arm might fail many times to grasp an object, but the data from those attempts can be used to train a model that succeeds. The team has demonstrated this approach with both a robot arm and a drone, turning each dropped object or collision into a hard-won lesson.

Another approach Pinto is taking involves copying humans. A robot is shown a human opening a door. It takes this data as a starting point and tries to do it itself, once again adding to its data set as it goes. The more doors the robot sees humans open, the more likely it is to succeed at opening a door it has never seen before.

Pinto’s most recent project is remarkably low-tech: he’s recruited a few dozen volunteers to record videos of themselves grabbing various objects around their homes, using iPhones mounted on $20 trash-picker tools. He thinks a couple hundred hours of footage should be enough to train a robust grasping model.

All this data collection is combined with efficient learning algorithms that let robots do more with less. Pinto and his colleagues have shown that dexterous behavior, such as opening a bottle with one hand or flipping a pancake, can be achieved with just an hour of training.

In effect, Pinto is hoping to give robots their large-language-model moment. In doing so, he could help unlock a whole new era in AI. “There’s this idea that the reason we have brains is to move,” he says. “It’s what evolution primed us to do to survive, to find food.

“Ultimately, I think the goal of intelligence is to move, to change things in the world, and I think the only things that can do that are physical creatures, like a robot.”

